What is Kubernetes?

In an orchestra, each musician has a part to play, but they all rely on the conductor to ensure they stay in tempo and play together to create the desired musical experience. In turn, each musician focuses on playing their specific instrument but is also part of a larger section of instruments dedicated to their role in the performance.

Similarly, Kubernetes is the “conductor” for modern software systems, automating container deployment, scaling, and management. Each musician is like a containerized application with a specific purpose. Kubernetes ensures these applications stay in sync, run efficiently, and scale easily, even as the overall system grows complex.

Read on to explore the technical details of Kubernetes, its key components, and why it is the foundation of many modern applications.

Key Kubernetes architecture and concepts

Just like the structure of an orchestra, Kubernetes has a well-defined architecture with various components that work together to manage workloads efficiently. To understand how our Kubernetes “orchestra” works, it’s important to understand the basic Kubernetes components first.

Key concepts:

- The Kubernetes cluster is the overall environment that contains one or more nodes.

- Each node is responsible for running pods.

- Pods are the smallest deployable units in Kubernetes.

- Pods can hold one or more containers.

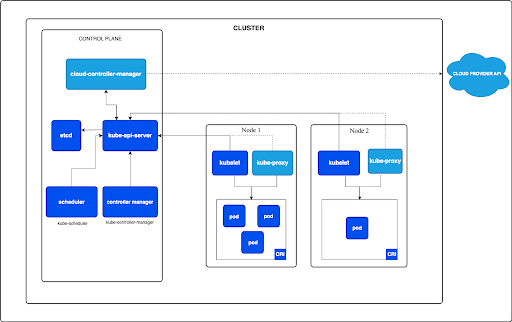

Here is a diagram that shows the relationship between the components listed below:

Containerization and microservices

Containers (the “musicians”) are isolated environments that hold an application and its dependencies together, allowing the application to run consistently across various environments. Docker is the most widely used containerization tool, and images built with Docker run on Kubernetes through container runtimes such as containerd.

Microservices are a modern software architecture in which an application is broken down into smaller, loosely coupled services. Kubernetes provides a platform to deploy, scale, and manage each microservice efficiently.

Kubernetes nodes

A node is a physical or virtual machine that provides compute capacity to the cluster. There are two types of nodes:

Master node

This is the “conductor” of the cluster, referred to as a control plane node in current Kubernetes documentation. It schedules pods, manages workloads, and maintains the desired state of the cluster.

Worker node

The worker node is where the action happens. These nodes run the containers that perform the actual work. Each worker node has the following components:

- kubelet: The kubelet ensures containers in a pod are running as expected and reports back to the control plane.

- kube-proxy: The kube-proxy maintains network rules on each node that route service traffic, whether it originates inside or outside the cluster, to the right pods, much like directing the audience to the right section of the orchestra.

- container runtime: The container runtime, such as containerd or CRI-O, is responsible for running containers. It functions like the individual instruments that bring the music to life.

What are Kubernetes pods?

Pods provide the environment in which containers run, allowing them to share the same network space. Think of a pod as a small section of the orchestra, like the string section or brass section, where multiple instruments (containers) play together.

- Single-container pods are the most common type of pod and run a single application.

- Multi-container pods can host multiple containers that share the same resources. For example, the containers in a pod share one IP address and can mount the same storage volumes.
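As a rough illustration of a multi-container pod, the sketch below runs a web server next to a helper container that reads the same log directory through a shared volume. The pod name, container names, image tags, and paths are assumptions chosen for the example, not values from this article.

```yaml
# Hypothetical multi-container pod: all names, tags, and paths are illustrative.
apiVersion: v1
kind: Pod
metadata:
  name: web-with-sidecar
spec:
  volumes:
    # An emptyDir volume lives as long as the pod and is visible to every
    # container that mounts it.
    - name: shared-logs
      emptyDir: {}
  containers:
    - name: web
      image: nginx:1.27
      volumeMounts:
        - name: shared-logs
          mountPath: /var/log/nginx
    - name: log-tailer
      image: busybox:1.36
      # Follows the access log that the web container writes to the shared volume.
      command: ["sh", "-c", "tail -F /var/log/nginx/access.log"]
      volumeMounts:
        - name: shared-logs
          mountPath: /var/log/nginx
```

Because both containers belong to the same pod, they are scheduled onto the same node and can also reach each other over localhost.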

Replication controllers and deployments

Replication controllers ensure that the specified number of identical pods is running at any given time, much like ensuring enough musicians in the same section are playing the same part. Failed pods are automatically replaced by the replication controller. In current Kubernetes versions, ReplicaSets have largely taken over this role.

Deployments are a higher-level resource that manages ReplicaSets and adds rolling updates and rollbacks. They ensure that the application has the desired number of replicas and that updates happen smoothly.

Services

A service defines a policy for accessing a set of pods and can expose them to external clients. Because a service provides a stable address for pods that come and go, you don't need to modify your application to use an unfamiliar service discovery mechanism. There are several types of services:

- ClusterIP: Exposes the service only within the cluster.

- NodePort: Exposes the service on a static port across all cluster nodes.

- LoadBalancer: Exposes the service publicly through a cloud provider's load balancer.

- ExternalName: Maps the service to an external DNS name.
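To make the service types more concrete, here is a minimal sketch of a NodePort service. The service name, the app: web label, and the port numbers are assumptions for illustration; the nodePort value must fall within the cluster's configured range, which is 30000-32767 by default.

```yaml
# Hypothetical NodePort service: name, label, and ports are illustrative.
apiVersion: v1
kind: Service
metadata:
  name: web-nodeport
spec:
  type: NodePort
  # Traffic is forwarded to pods carrying this label.
  selector:
    app: web
  ports:
    - protocol: TCP
      port: 80          # port exposed on the service's cluster IP
      targetPort: 80    # port the container listens on
      nodePort: 30080   # static port opened on every node
```

Changing type to ClusterIP and removing the nodePort field would keep the same service reachable only from inside the cluster.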

ConfigMaps and secrets

Kubernetes uses ConfigMaps and secrets to keep configuration data and sensitive information separate from application code:

- ConfigMaps store non-sensitive, configuration-related data such as environment variables or database URLs.

- Secrets store sensitive information like passwords or API keys. By default, secret values are base64-encoded rather than encrypted, so protecting them requires enabling encryption at rest and restricting access.
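The following sketch shows one possible way to define a ConfigMap and a Secret and load both into a container as environment variables. Every name, key, and value here is a placeholder invented for the example.

```yaml
# Hypothetical configuration objects: names, keys, and values are placeholders.
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
data:
  DATABASE_URL: "postgres://db.example.internal:5432/app"
  LOG_LEVEL: "info"
---
apiVersion: v1
kind: Secret
metadata:
  name: app-secret
type: Opaque
# stringData accepts plain text; the API server stores it base64-encoded.
stringData:
  API_KEY: "replace-me"
---
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  containers:
    - name: app
      image: nginx:1.27
      # Each key in the ConfigMap and the Secret becomes an environment variable.
      envFrom:
        - configMapRef:
            name: app-config
        - secretRef:
            name: app-secret
```

Keeping these values outside the container image means the same image can be promoted between environments with different settings.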

Control plane

The control plane manages the cluster state and ensures everything is running properly. The key components of the control plane include:

- kube-apiserver: The front end for the Kubernetes control plane that exposes the Kubernetes API and communicates with the rest of the control plane.

- kube-scheduler: Determines where pods should be deployed based on available resources and constraints.

- kube-controller-manager: Runs the controllers that watch the cluster and work to move its current state toward the desired state.

- etcd: A distributed key-value store with critical details about the cluster's state, such as configurations and metadata.

Other concepts to know

Namespaces allow you to logically separate cluster resources. They help organize and isolate different environments (e.g., development, testing, production).
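As a small sketch, a namespace is itself a resource, and other resources are placed in it through their metadata. The names development and app-config below are assumptions for the example.

```yaml
# Hypothetical namespace plus one resource placed inside it.
apiVersion: v1
kind: Namespace
metadata:
  name: development
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
  # The namespace field scopes this ConfigMap to the development namespace.
  namespace: development
data:
  LOG_LEVEL: "debug"
```

A ConfigMap with the same name could exist in a separate production namespace without any conflict.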

An ingress defines rules for routing HTTP/S traffic to the appropriate cluster services based on hostnames, URLs, or paths.
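A minimal ingress might look like the sketch below, assuming an ingress controller is installed in the cluster and a service named nginx-service listens on port 80. The hostname app.example.com is a placeholder.

```yaml
# Hypothetical ingress: the hostname is a placeholder, and the rule only takes
# effect if an ingress controller is running in the cluster.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web-ingress
spec:
  rules:
    - host: app.example.com
      http:
        paths:
          # Requests whose path starts with / are sent to nginx-service.
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-service
                port:
                  number: 80
```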

Network policies define the rules for communication between pods to improve security. They specify which pods can talk to each other and which cannot.
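For example, a policy along the following lines would let only pods labeled app: frontend reach pods labeled app: backend on TCP port 8080. The labels and port are assumptions for illustration, and the policy is only enforced when the cluster's network plugin supports network policies.

```yaml
# Hypothetical network policy: labels and port are illustrative.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-backend
spec:
  # The policy applies to pods with this label.
  podSelector:
    matchLabels:
      app: backend
  policyTypes:
    - Ingress
  ingress:
    # Only pods labeled app: frontend in the same namespace may connect.
    - from:
        - podSelector:
            matchLabels:
              app: frontend
      ports:
        - protocol: TCP
          port: 8080
```

Once a pod is selected by an ingress policy, any incoming traffic that no rule allows is denied.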

Key features of Kubernetes

There are several features of Kubernetes that make it such a staple of modern application architecture:

Self-healing

Kubernetes is designed to automatically detect and fix problems. If a pod or container fails, it is automatically replaced.
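One common way to help Kubernetes detect a failed container is a liveness probe. The sketch below is an illustrative configuration, with the pod name, image tag, and timing values chosen as assumptions rather than recommendations.

```yaml
# Hypothetical pod with a liveness probe: name, tag, and timings are illustrative.
apiVersion: v1
kind: Pod
metadata:
  name: web-probed
spec:
  containers:
    - name: web
      image: nginx:1.27
      livenessProbe:
        # The kubelet sends an HTTP GET to / on port 80.
        httpGet:
          path: /
          port: 80
        initialDelaySeconds: 5   # wait before the first check
        periodSeconds: 10        # check every 10 seconds
        failureThreshold: 3      # restart after 3 consecutive failures
```

If the probe keeps failing, the kubelet restarts the container according to the pod's restart policy.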

Resource management and horizontal scaling

Kubernetes lets you define the CPU, memory, storage, and other resources each container requires, which helps prevent resource conflicts. It is designed to scale, whether you’re running a handful of containers or thousands. Horizontal scaling automatically adjusts the number of pods based on resource usage (CPU, memory) so the system can handle increased traffic or load.
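The sketch below combines both ideas: a Deployment whose container declares resource requests and limits, and a HorizontalPodAutoscaler that scales it on CPU utilization. The name web, the image tag, and all numeric values are assumptions for the example, and CPU-based autoscaling typically requires a metrics source such as metrics-server in the cluster.

```yaml
# Hypothetical Deployment and autoscaler: names and numbers are illustrative.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.27
          resources:
            # Requests are what the scheduler reserves for the container.
            requests:
              cpu: 250m
              memory: 128Mi
            # Limits cap what the container may consume.
            limits:
              cpu: 500m
              memory: 256Mi
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web
  minReplicas: 2
  maxReplicas: 10
  metrics:
    # Add pods when average CPU use exceeds 70% of the requested CPU.
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
```

Utilization is measured relative to the CPU request, which is why the container spec sets one.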

Rolling updates and rollbacks

Kubernetes rolls out new versions of an application gradually, replacing pods a few at a time so the application can stay available during the update. If something goes wrong, you can roll back to a previous revision.
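How aggressively pods are replaced during an update can be tuned on the Deployment itself. The following is a sketch with assumed values: the name web-rolling, the image tag, and the surge settings are illustrative only.

```yaml
# Hypothetical Deployment with an explicit rolling update strategy.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-rolling
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0   # never drop below the desired replica count
      maxSurge: 1         # create at most one extra pod during the update
  selector:
    matchLabels:
      app: web-rolling
  template:
    metadata:
      labels:
        app: web-rolling
    spec:
      containers:
        - name: web
          image: nginx:1.27
```

With these settings, changing the image tag and reapplying the manifest replaces pods one at a time, and running kubectl rollout undo deployment/web-rolling returns the Deployment to its previous revision.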

Service discovery and load balancing

Kubernetes gives each service a stable IP address and DNS name, which makes it easy for pods to discover and communicate with each other. It also automatically balances the load across the pods behind a service.

Flexibility

Kubernetes supports a wide range of workloads, from stateless applications to complex, stateful services. Whether you’re running batch jobs or microservices, Kubernetes adapts to your needs, offering flexibility in how your applications are managed.

Community support and ecosystem

Kubernetes is backed by a large, active community and has a rich ecosystem of tools and extensions. This ecosystem includes tools for monitoring (e.g., Prometheus), CI/CD (e.g., Jenkins), and advanced networking features (e.g., Istio), all of which help you optimize your applications and infrastructure.

A practical example with Kubernetes

Kubernetes, often abbreviated as K8s, is the most widely used container orchestration platform. It provides a robust framework for deploying and managing containerized applications at scale. Let’s walk through an example to illustrate how Kubernetes can be used to deploy and scale an application.

Defining a deployment

A Deployment in Kubernetes is a resource that specifies how a group of identical Pods should be created and managed. Below is an example of a YAML configuration file for deploying an NGINX web server:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:latest
            ports:
            - containerPort: 80

This configuration defines a Deployment named nginx-deployment that will maintain three replicas of an NGINX web server. Each Pod will run the latest version of the NGINX container and expose port 80.

Deploying the application

To apply the Deployment, save the YAML file as deployment.yaml and run the following command:

    kubectl apply -f deployment.yaml

Kubernetes will create three Pods based on the defined specifications and ensure they are running on available nodes in the cluster.

Exposing the application

To make the application accessible outside the cluster, you need to create a Service. Here’s a YAML file for a Service that exposes the NGINX Deployment:

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
    spec:
      selector:
        app: nginx
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80
      type: LoadBalancer

Save the file as service.yaml and apply this Service using:

    kubectl apply -f service.yaml

On a cloud provider that supports it, Kubernetes will provision a load balancer that routes external traffic to the Pods running the NGINX containers.

Scaling the application

Scaling is one of Kubernetes' most powerful features. To scale the application to five replicas, use the following command:

    kubectl scale deployment nginx-deployment --replicas=5

Kubernetes will create two more Pods to reach the new desired state of five replicas.

Conclusion

Kubernetes conducts the modern cloud-native orchestra by efficiently orchestrating containers. Its capabilities make it the go-to platform for managing containerized applications at scale. By automating deployment, scaling, and management tasks, Kubernetes simplifies the complexity of running large, distributed systems.

Whether you're building a simple web app or a complex microservices architecture, Kubernetes provides the tools to keep your application running smoothly, with the flexibility, scalability, and reliability needed to meet modern demands.