AI is no longer a futuristic concept—it’s becoming an everyday tool. Open-source models are evolving at an unprecedented pace, while optimizations are driving down hardware requirements. Today, even lightweight AI models can run efficiently without a GPU, making advanced AI accessible for a wider range of applications than ever before.

At bunny.net, we see this shift as part of a bigger picture. The cloud, built on layers of complexity, has long been a barrier to innovation. That’s why we created Magic Containers—a platform designed to strip away that complexity and make global deployment effortless. Now, we’re taking that vision further by demonstrating how DeepSeek R1, a powerful open-source model, can run seamlessly on Magic Containers.

While running DeepSeek on Magic Containers is fun, it also opens doors to real-world applications:

- Educational purposes – Experimenting with AI inference without specialized hardware.

- Smarter IoT devices – Deploying AI-powered decision-making at the edge.

- AI-driven game NPCs and chatbots – Running lightweight AI models for in-game non-player characters (NPCs), interactive storytelling, or player support bots in online games, reducing latency and enabling dynamic, real-time interactions.

And this is just the beginning. With advances in model efficiency and hardware acceleration, AI inference at the edge is set to become cheaper and more accessible than ever.

Let’s dive into how you can deploy DeepSeek on Magic Containers in just a few simple steps. If you get stuck or have questions on the steps below, don’t hesitate to contact our knowledgeable, fast, and friendly Support team!

From Docker image to live AI inference in a few clicks

Before we start, let’s describe what we need to make our DeepSeek inference work:

- [OPTIONAL] Prepare and build a Docker image that will expose an API to communicate with DeepSeek.

- Deploy the Docker image.

For the first step, we utilize Ollama, a lightweight framework optimized for running open-source AI models like DeepSeek efficiently. It's like a smart translator that helps us talk to DeepSeek effortlessly, handling all the heavy lifting in the background.

By the way, all the code described below can be found in this repository: https://github.com/BunnyWay/magic-deepseek-demo, including a GitHub Action that automatically builds the image. Feel free to fork and deploy any model you want.

1. [OPTIONAL] Preparing the Docker image

As we mentioned earlier, this step is optional. You can use the pre-built image and move directly to Step 2. However, if you’d like to explore how to prepare a Docker image for this use case, let’s dive in!

The Dockerfile for this deployment is incredibly simple:

```dockerfile
FROM ollama/ollama

ENV APP_HOME=/home
WORKDIR $APP_HOME

COPY . .
RUN chmod +x pull.sh

ENTRYPOINT ["/usr/bin/bash", "pull.sh"]
```

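This builds an image on top of the ollama/ollama base image and sets up the execution environment. The DeepSeek R1 model itself is not baked into the image; it is downloaded when the container starts.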

Since Ollama requires models to be fetched before execution, we include a startup script (pull.sh) to handle this automatically:

```shell
#!/bin/bash

# Start Ollama server in the background
ollama serve &

# Wait for Ollama server to start
sleep 5

# Pull DeepSeek R1 model
ollama pull deepseek-r1:1.5b

# Wait for the Ollama server to finish
wait $!
```

This script ensures that when the container starts, it launches the Ollama server, fetches the model, and then keeps serving the inference API.
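A fixed `sleep 5` is fine for a demo, but the server may need more or less time on a given host. One possible alternative, sketched below, polls until a command succeeds. The helper name `wait_until_ready` is hypothetical and not part of the repository, and the commented usage assumes that `ollama list` exits with an error while the server is still starting.

```shell
#!/bin/bash

# wait_until_ready MAX_TRIES CMD [ARGS...]
# Hypothetical helper: re-runs CMD about once per second until it succeeds
# or MAX_TRIES attempts have failed. Returns 0 on success, 1 otherwise.
wait_until_ready() {
  local max_tries attempt
  max_tries="$1"
  shift
  attempt=1
  while [ "$attempt" -le "$max_tries" ]; do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    sleep 1
    attempt=$((attempt + 1))
  done
  return 1
}

# Possible use inside pull.sh in place of "sleep 5" (not executed here):
#   ollama serve &
#   wait_until_ready 30 ollama list || exit 1
#   ollama pull deepseek-r1:1.5b
#   wait $!
```

With this approach the container fails fast if the server never comes up, instead of attempting a pull against a server that is not listening.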

Once the Dockerfile and script are ready, build the image and push it to a container registry:

```shell
docker build -t ghcr.io/bunnyway/magic-deepseek-demo:latest .
docker push ghcr.io/bunnyway/magic-deepseek-demo:latest
```

We use GitHub Container Registry (GHCR) to store the image, making it instantly deployable on Magic Containers.
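To try the image on your own machine as well, something like the following sketch may help. It assumes Docker is installed locally; the function name `run_local` and the container name `magic-deepseek-local` are made up for illustration.

```shell
#!/bin/bash

# run_local IMAGE [HOST_PORT]
# Hypothetical helper: starts IMAGE in the background and publishes the
# Ollama port (11434 inside the container) on HOST_PORT (default 11434).
run_local() {
  local image port
  image="$1"
  port="${2:-11434}"
  docker run -d --rm --name magic-deepseek-local -p "${port}:11434" "$image"
}

# Example (not executed here):
#   run_local ghcr.io/bunnyway/magic-deepseek-demo:latest
```

Because the entrypoint is pull.sh, the container will start the server and download the model on first start, so the API may take a moment to answer. The container can be stopped again with `docker stop magic-deepseek-local`.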

2. Deploying Ollama API

Now comes the magic. Instead of dealing with complex cloud providers, Magic Containers lets you deploy in seconds.

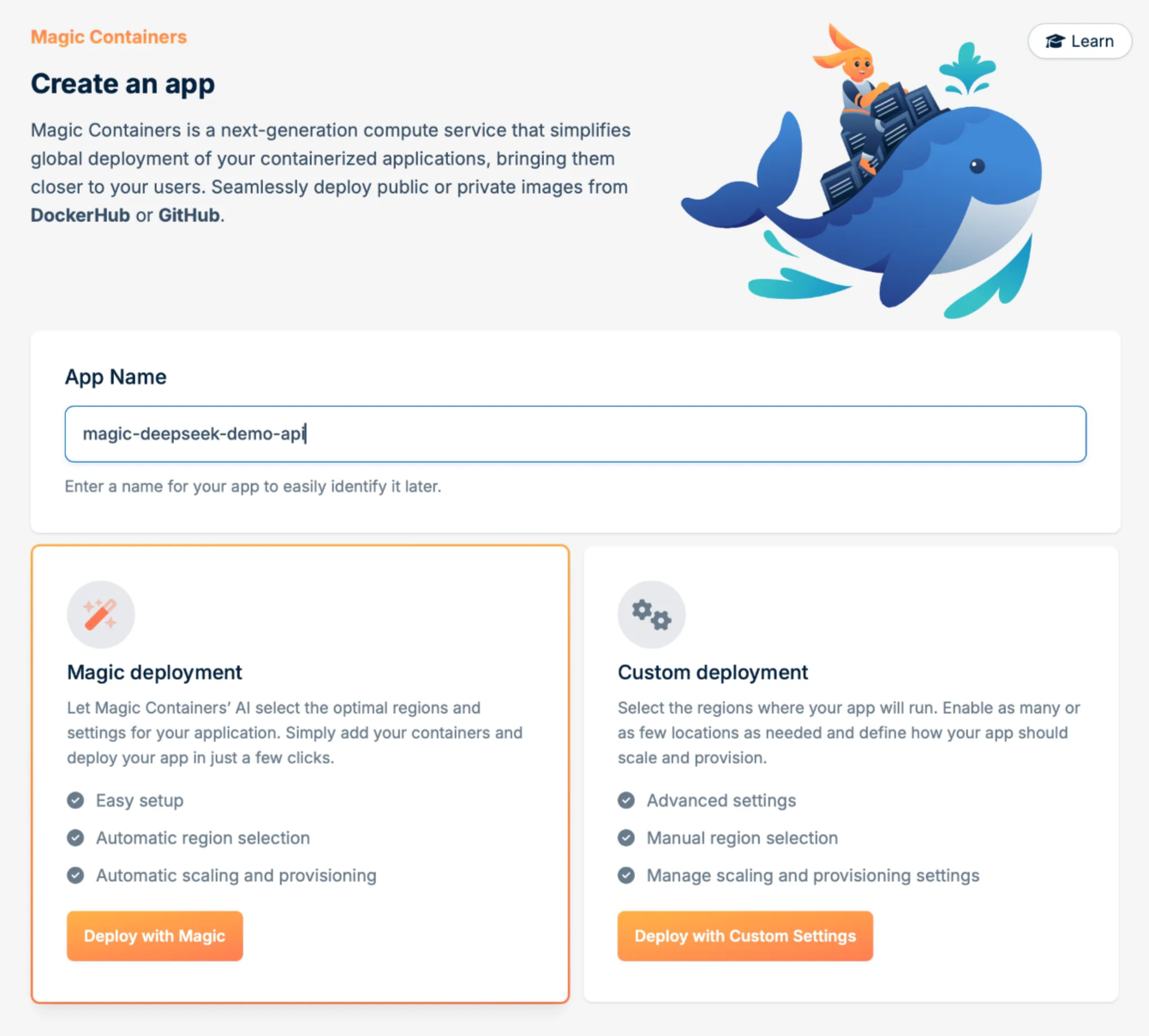

Choose the Magic Containers product in the dashboard and create a new app by clicking Add App.

Give your app a name (we will use magic-deepseek-demo-api) and choose Magic deployment. This type of deployment will automatically provision our app around the globe, close to our users. This is especially beneficial for inference, as we want to stream responses quickly from the nearest point of presence.

Now we need to choose an image. You can use an image you have built yourself, or you can use the image we have already prepared for you. If you prefer to use the image from our repo, continue with the steps in this section.

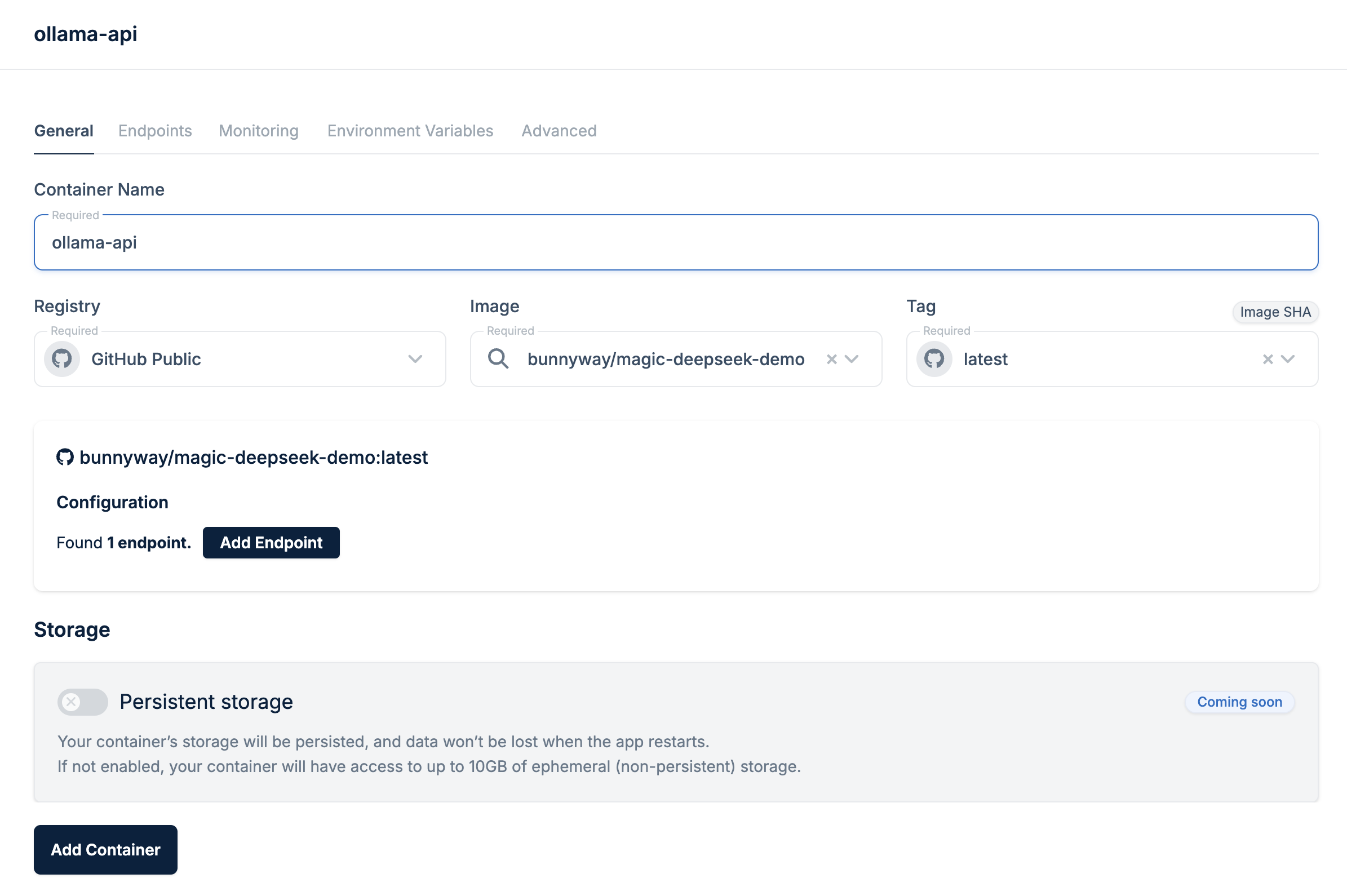

Next, as shown in the screenshot below, enter the following details to test using our public image:

- Container name: ollama-api

- Registry: GitHub Public

- Image: bunnyway/magic-deepseek-demo

- Tag: latest

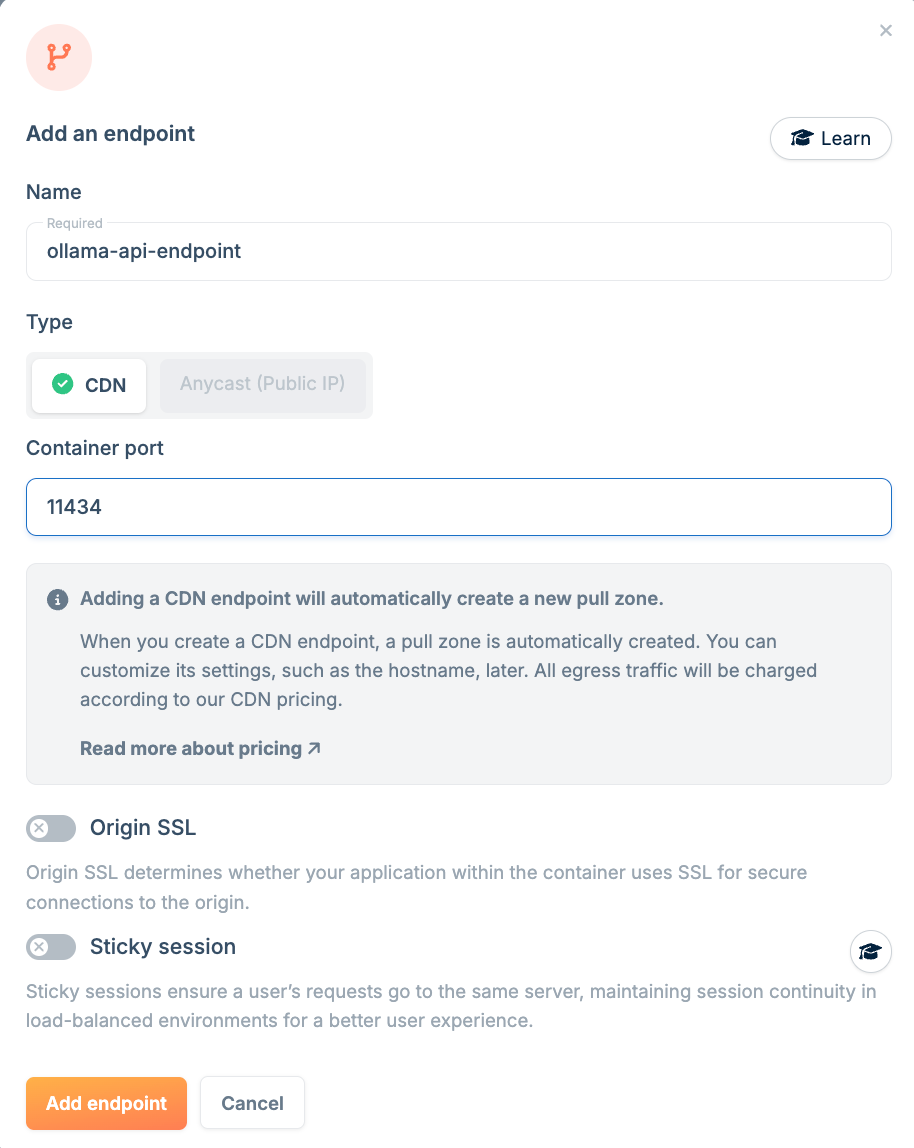

Ollama uses port 11434 to expose its API. Click Add Endpoint, then click Go to Endpoints to create a CDN-based endpoint to make it publicly accessible. Once in the Endpoints menu, click Add New Endpoint and fill out the following details as shown in the screenshot below:

- Name: ollama-api-endpoint

- Container port: 11434

Click Add Endpoint, then click Add Container.

Finally, click Next Step, review your settings, and click Confirm and Create.

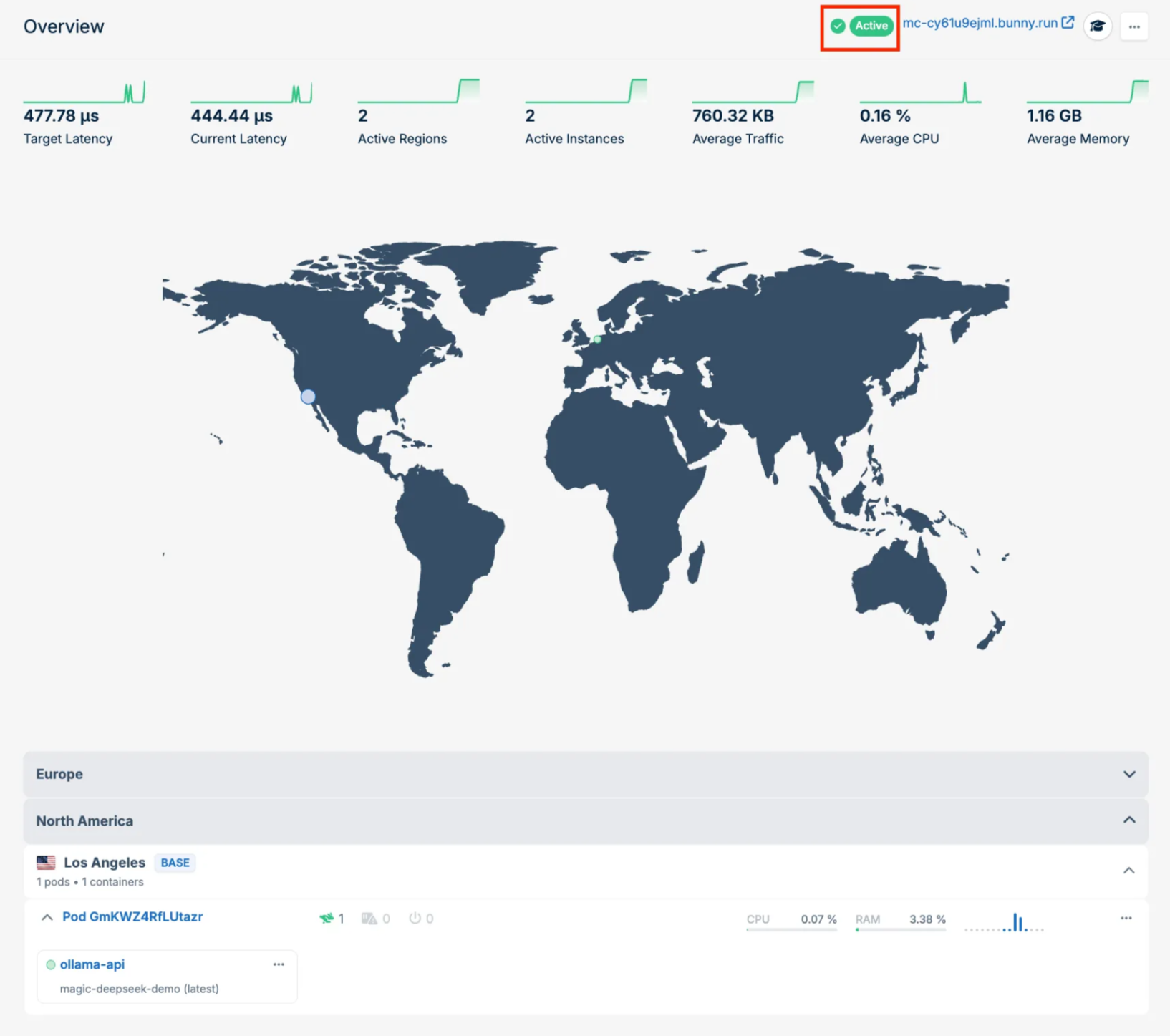

In just a few minutes, the app will be up and running. Once it's ready, you'll see the green Active indicator on the analytics screen, just like in the screenshot below:

And that's it! The inference API is now available at the endpoint displayed at the top of the screen.
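A quick way to confirm that the endpoint is reachable is to request its root path, which an Ollama server normally answers with the text "Ollama is running". The sketch below wraps that check in a hypothetical helper named `check_ollama`; the hostname in the example is a placeholder for your own endpoint.

```shell
#!/bin/bash

# check_ollama BASE_URL
# Hypothetical helper: requests the root path of BASE_URL and reports
# whether the reply contains Ollama's usual status text.
check_ollama() {
  local base_url
  base_url="${1%/}"   # drop one trailing slash, if present
  if curl -fsS "${base_url}/" | grep -q "Ollama is running"; then
    echo "OK: ${base_url}"
  else
    echo "NOT READY: ${base_url}" >&2
    return 1
  fi
}

# Example (not executed here):
#   check_ollama "https://<your-mc-host>"
```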

3. Accessing the API

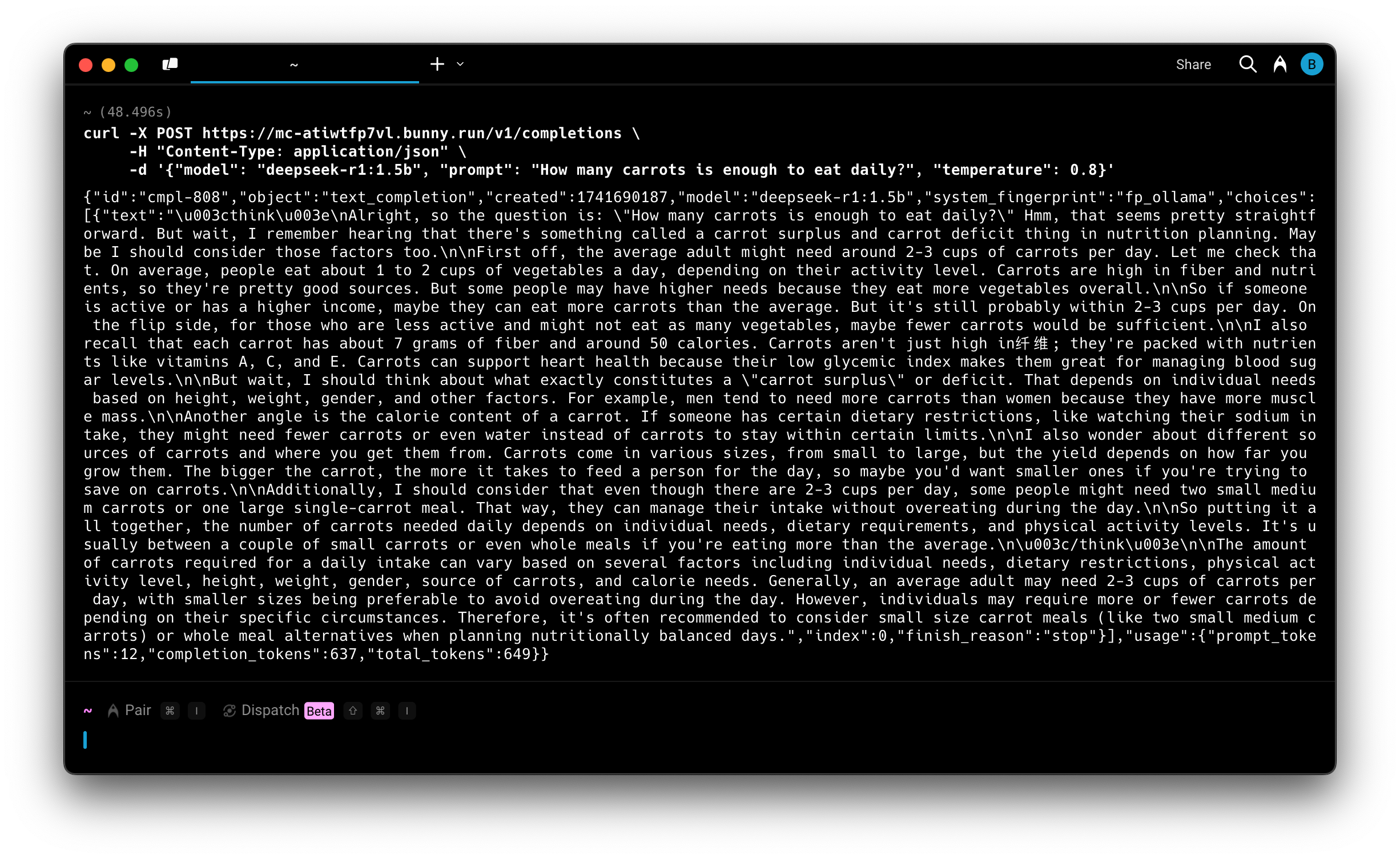

Let’s test what we have deployed using cURL:

```shell
curl -X POST https://<your-mc-host>/v1/completions \
  -H "Content-Type: application/json" \
  -d '{"model": "deepseek-r1:1.5b", "prompt": "How many carrots is enough to eat daily?", "temperature": 0.8}'
```
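The request above goes through Ollama's OpenAI-compatible route. Ollama also has a native `/api/generate` endpoint, and setting `"stream": false` asks it to return one JSON document instead of a stream of chunks. The helper below is a sketch only: the name `ask_ollama` is hypothetical, and it assumes the prompt contains no double quotes or backslashes, since it does no JSON escaping.

```shell
#!/bin/bash

# ask_ollama HOST PROMPT
# Hypothetical helper: sends PROMPT to the native generate endpoint on HOST
# and prints the raw JSON reply. PROMPT must not contain characters that
# need JSON escaping (assumption made to keep the sketch short).
ask_ollama() {
  local host prompt
  host="$1"
  prompt="$2"
  curl -sS -X POST "https://${host}/api/generate" \
    -H "Content-Type: application/json" \
    -d "{\"model\": \"deepseek-r1:1.5b\", \"prompt\": \"${prompt}\", \"stream\": false}"
}

# Example (not executed here):
#   ask_ollama "<your-mc-host>" "How many carrots is enough to eat daily?"
```

In the non-streaming reply, the generated text is carried in the `response` field of the returned JSON.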

Here's what it should look like:

4. Deploying the UI

Not everyone wants to interact with AI using HTTP APIs, so let’s also add a pretty UI.

For the UI we have chosen https://github.com/open-webui/open-webui because it is easy to deploy and works with the Ollama API.

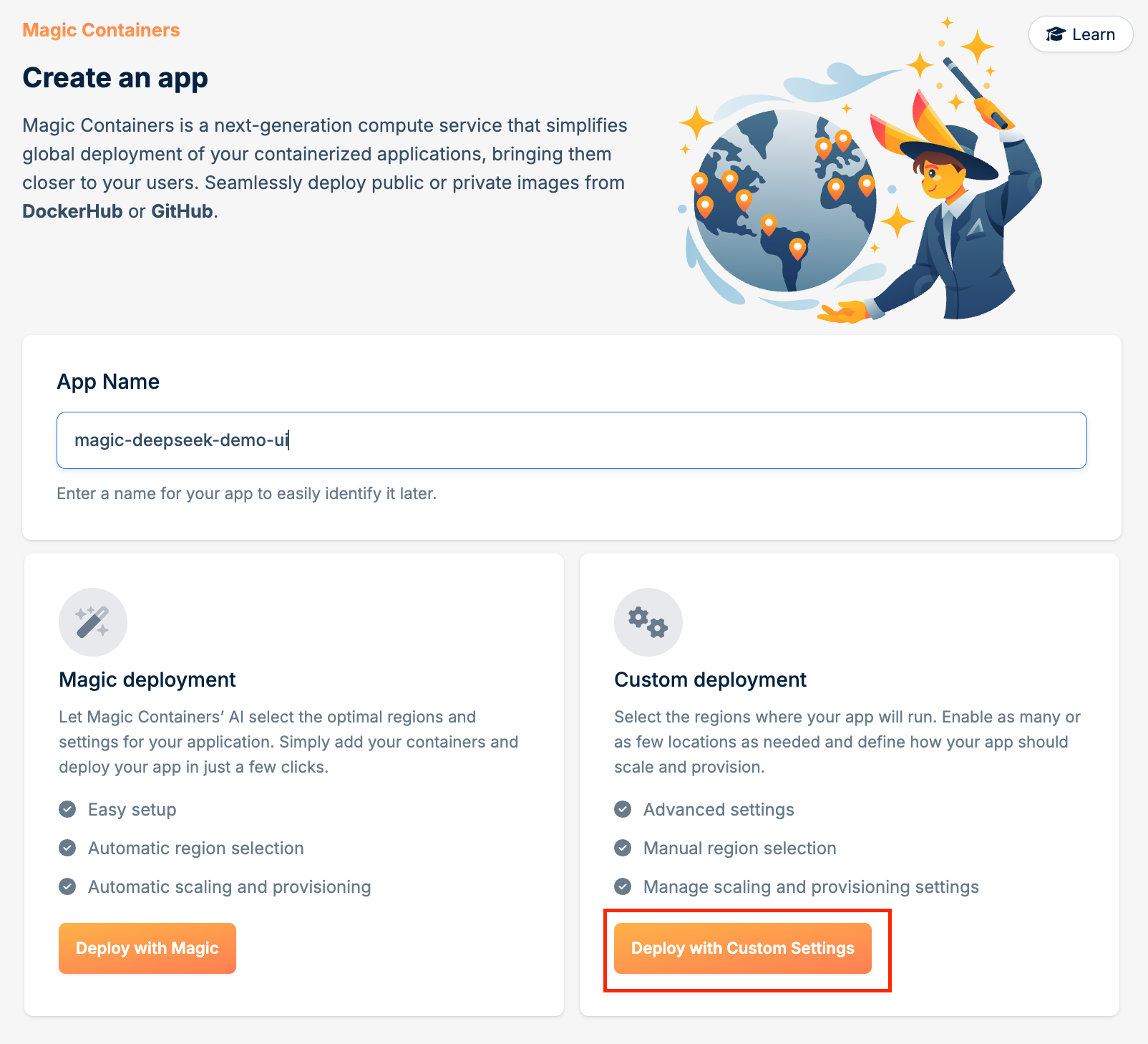

In the main Magic Containers tab of your dashboard, click + Add App. This time, we will choose Custom Deployment because this UI requires persistence. While Magic Containers does not yet support persistent volumes, the container will retain all necessary data until the next restart, which is sufficient for demo purposes.

It is also worth mentioning that the UI will be a separate application, as it doesn’t require the same orchestration as the Ollama API.

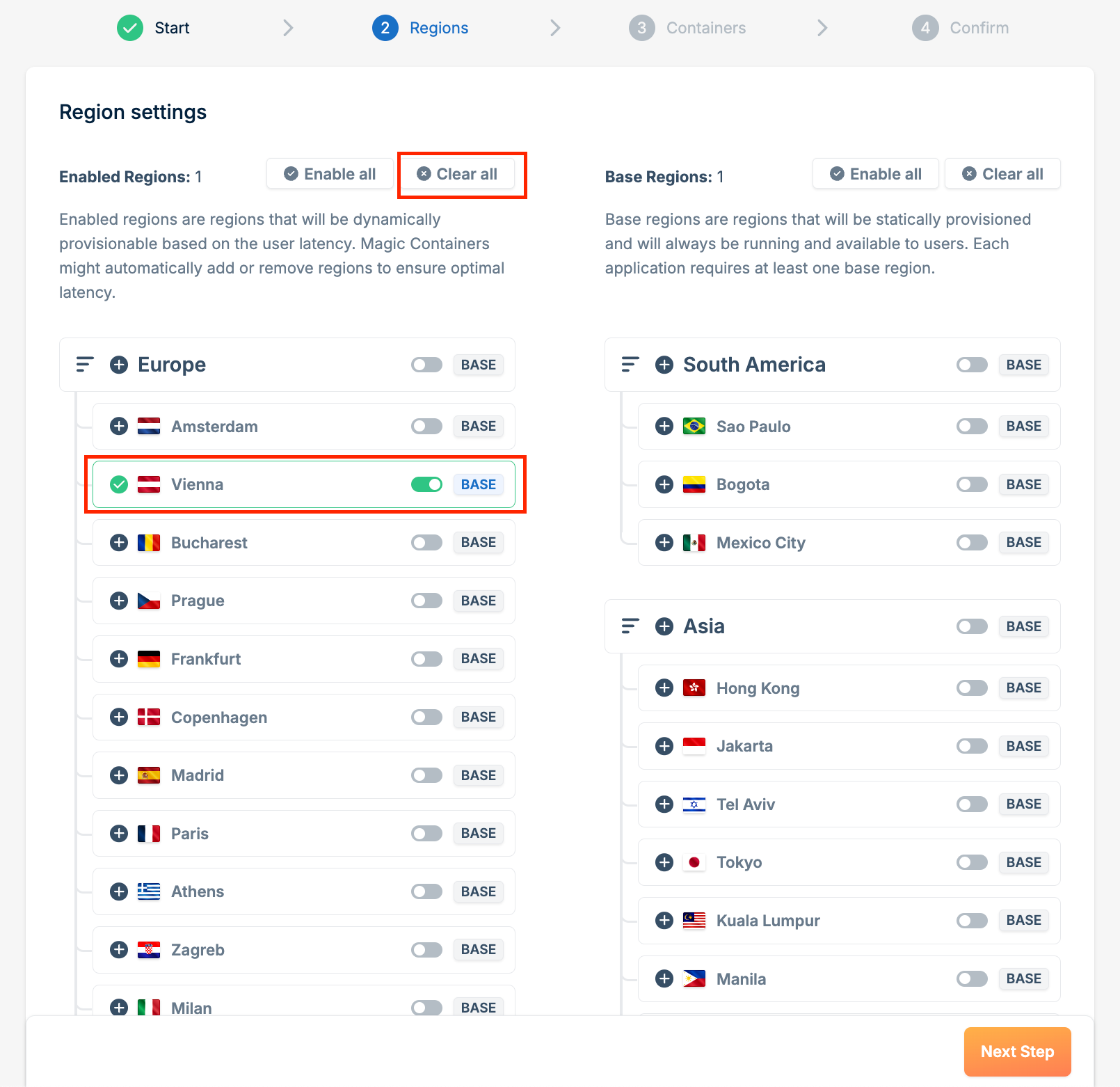

Click Clear All near Enabled Regions, then choose any region you want, but only one. In our screenshot below, we have selected Vienna. Ensure that BASE is toggled from gray to green for that region, then click Next Step.

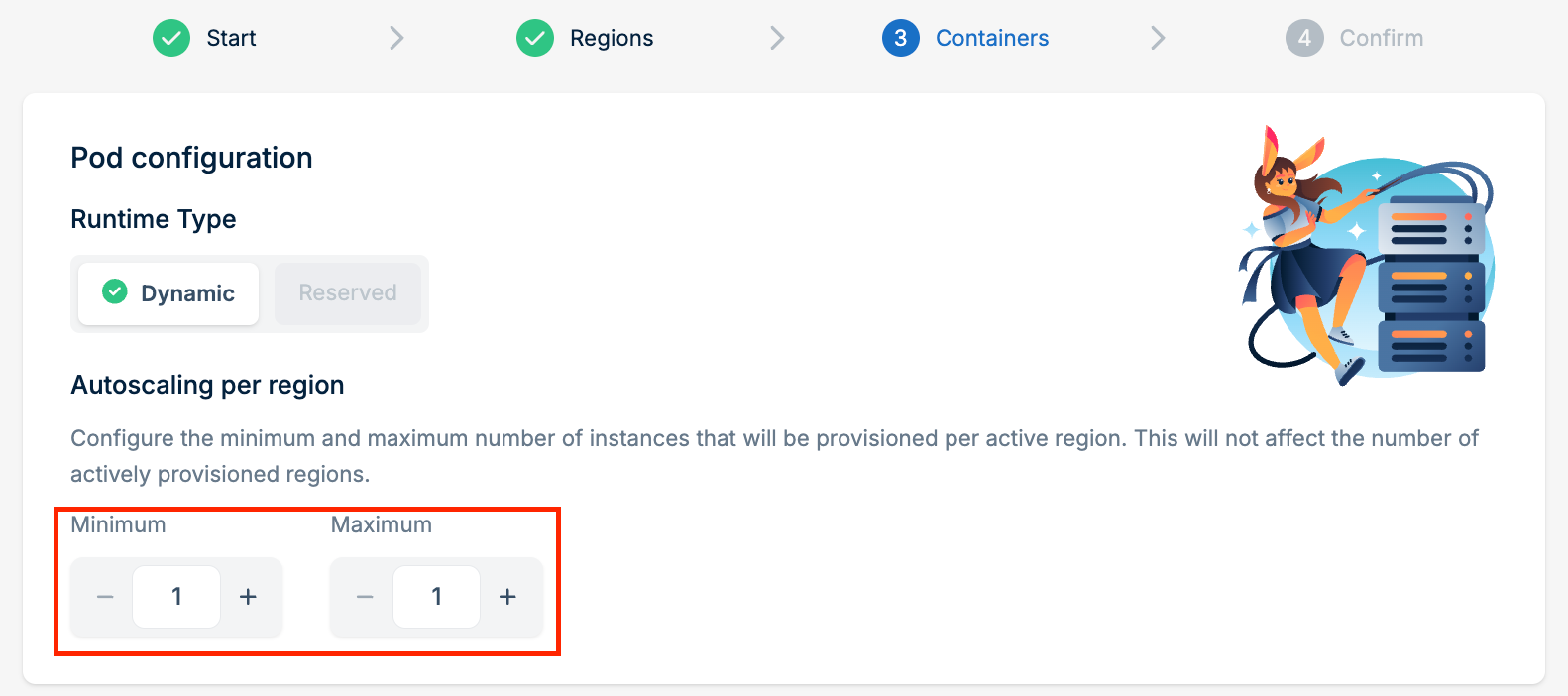

Set Minimum and Maximum Autoscaling to 1 to ensure that all requests go to the same container, which retains the necessary data.

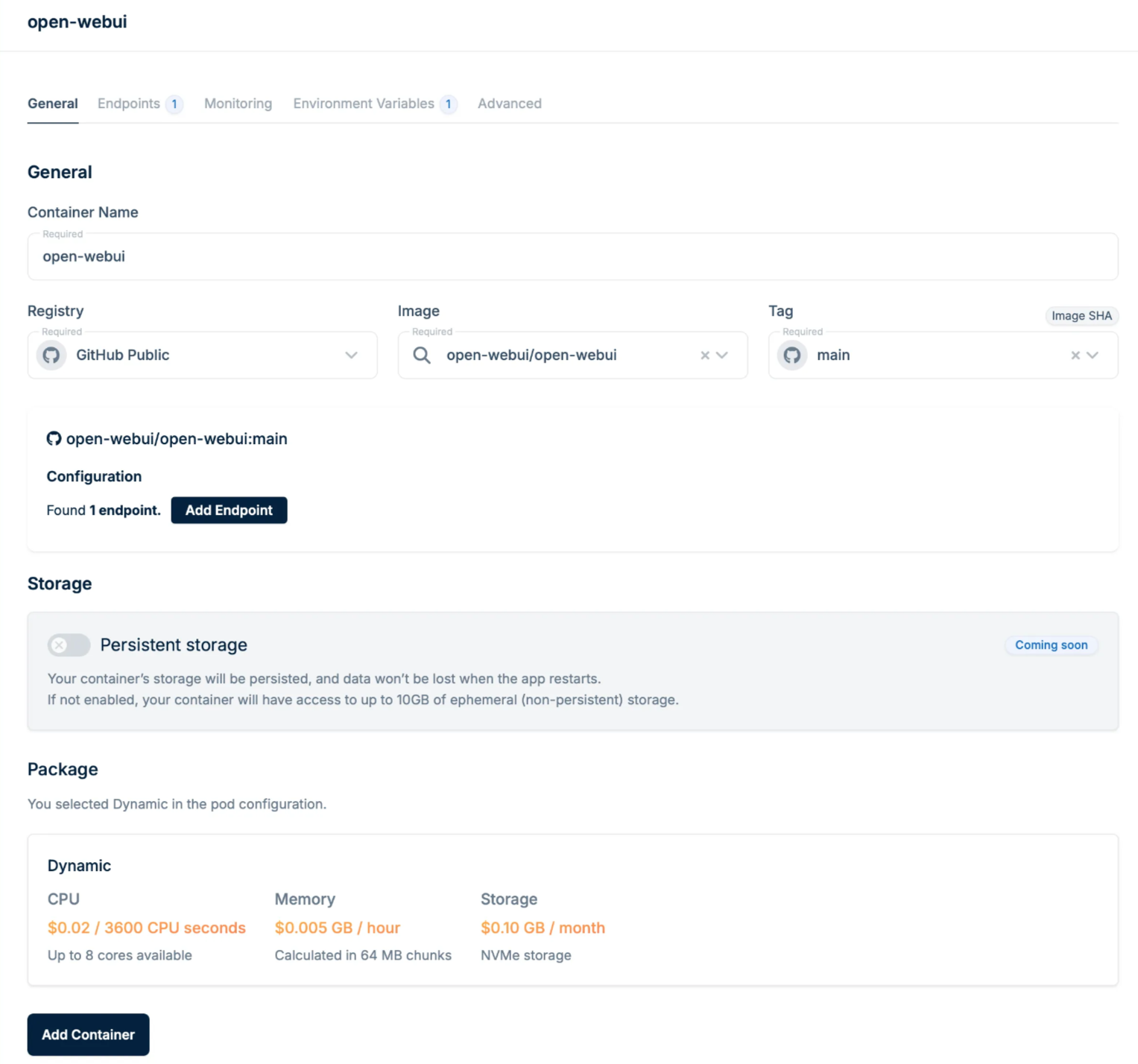

As shown in the screenshot below, enter the following details:

- Container name: open-webui

- Registry: GitHub Public

- Image: open-webui/open-webui

- Tag: main

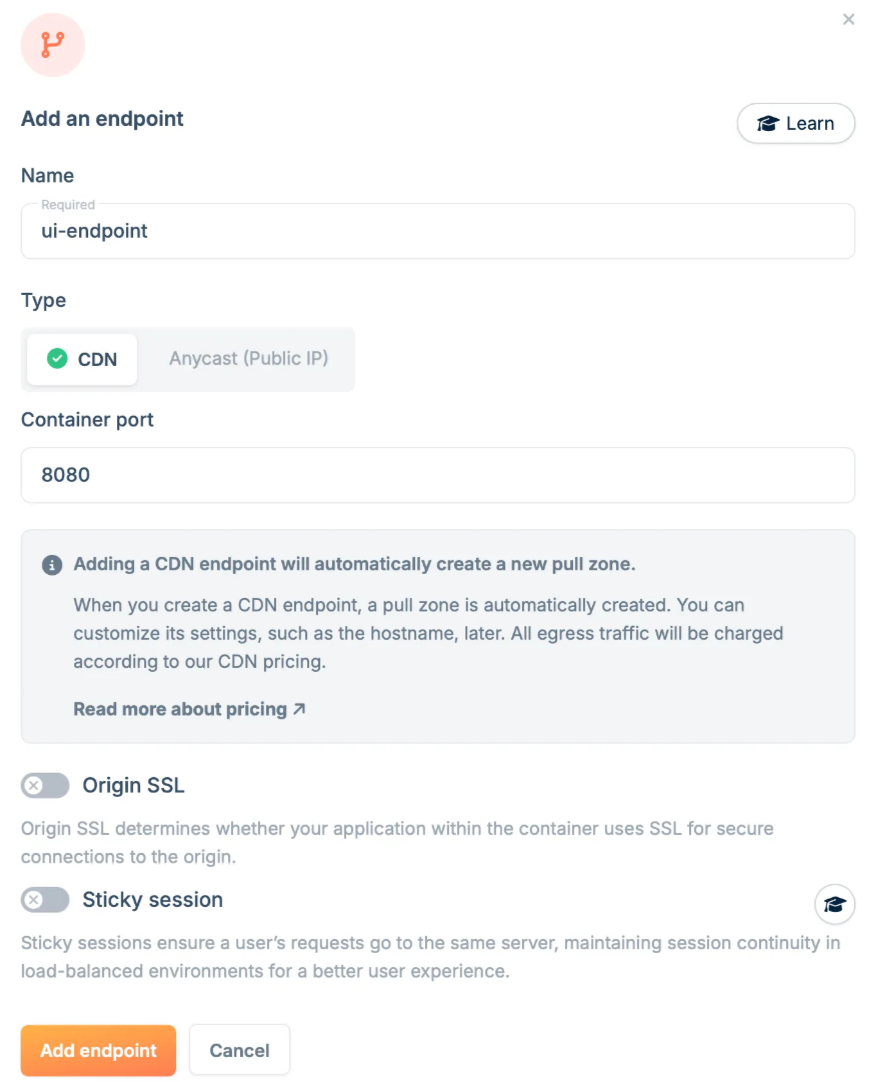

Click Add Endpoint and configure the UI endpoint. The OpenWebUI interface listens on port 8080, so we will set it as the container port for our CDN endpoint.

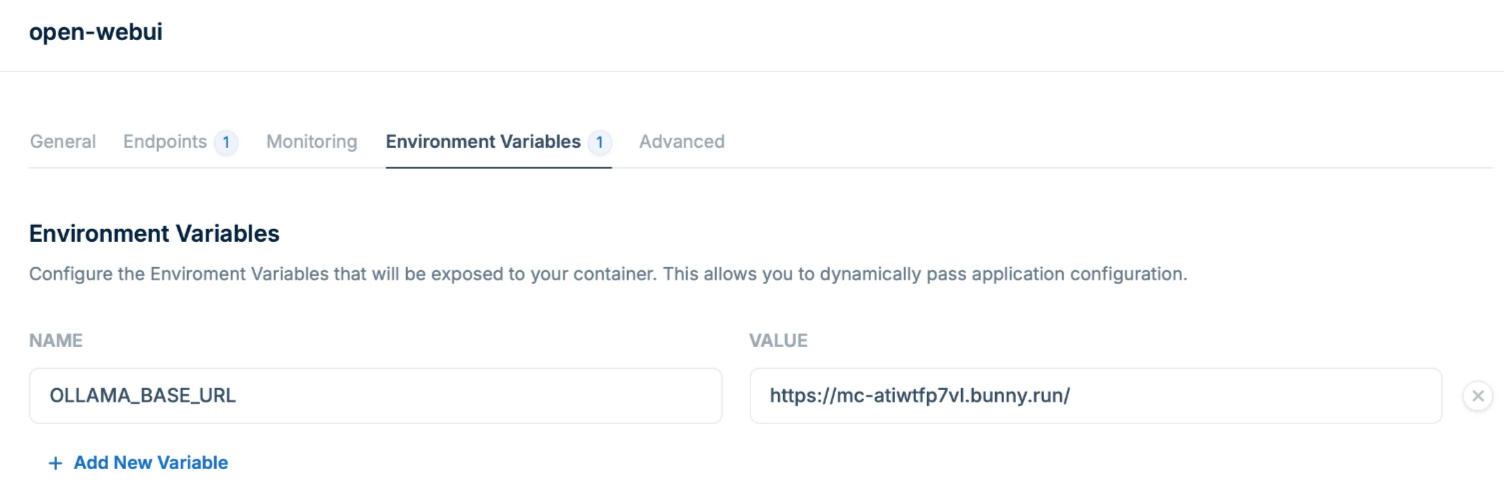

To get responses from the DeepSeek model, the WebUI needs to be configured with an Ollama API URL. To set this up, copy the HTTP endpoint URL created for the Ollama app and paste it into the environment variable OLLAMA_BASE_URL.

Just like in the step for deploying the Ollama API, do the following:

- Click Add Container, then click Next Step.

- Review the settings you see on the screen, then click Confirm and Create.

- Wait for the container to be deployed and the status to be Active.

You are amazing! You've successfully completed all the steps! Now, let's check our freshly deployed UI.

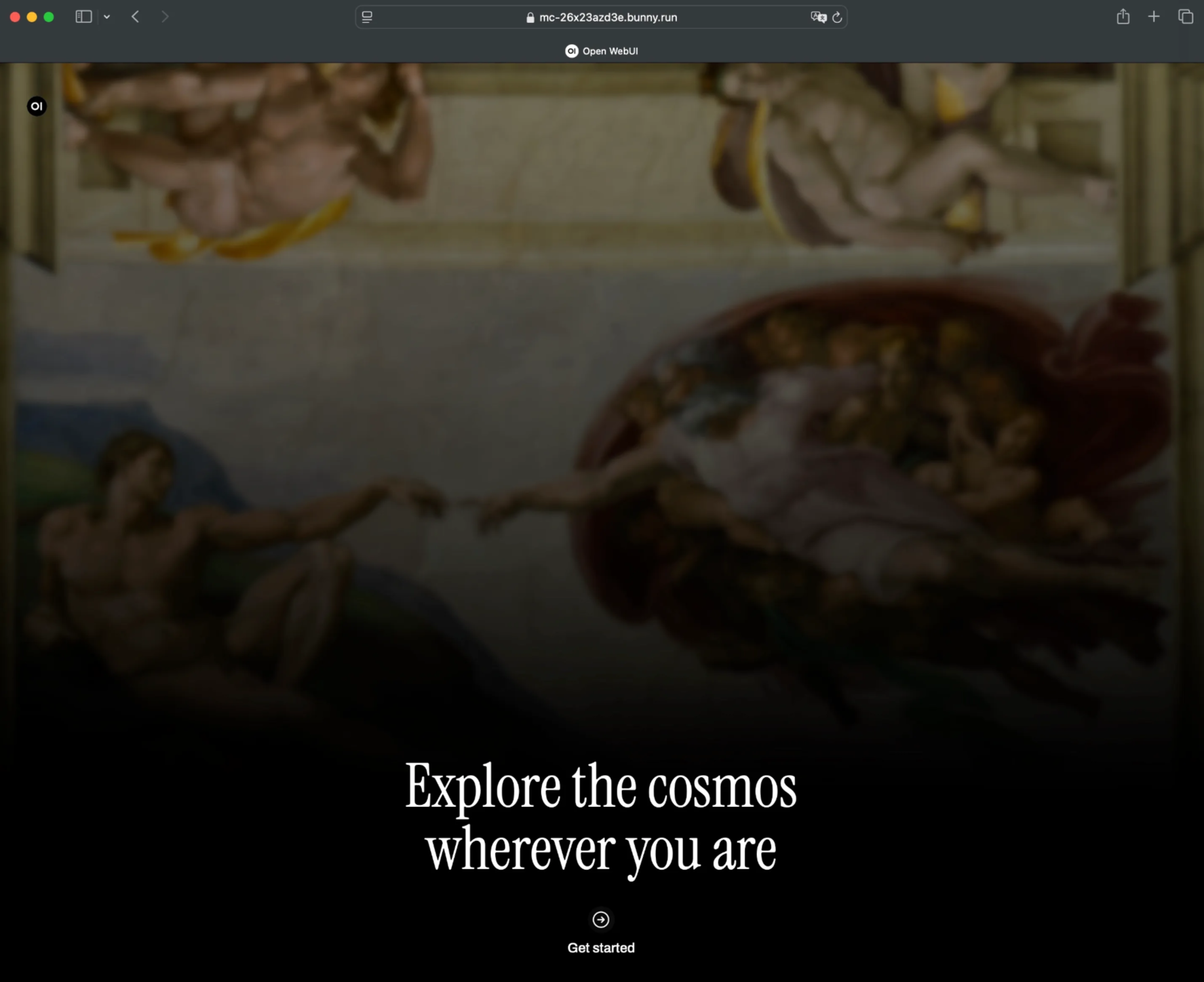

5. Checking the UI

Now, we can take a look at the UI we deployed.

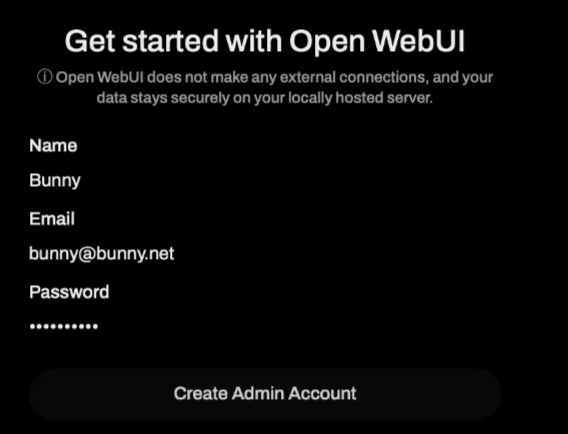

Let’s create an account:

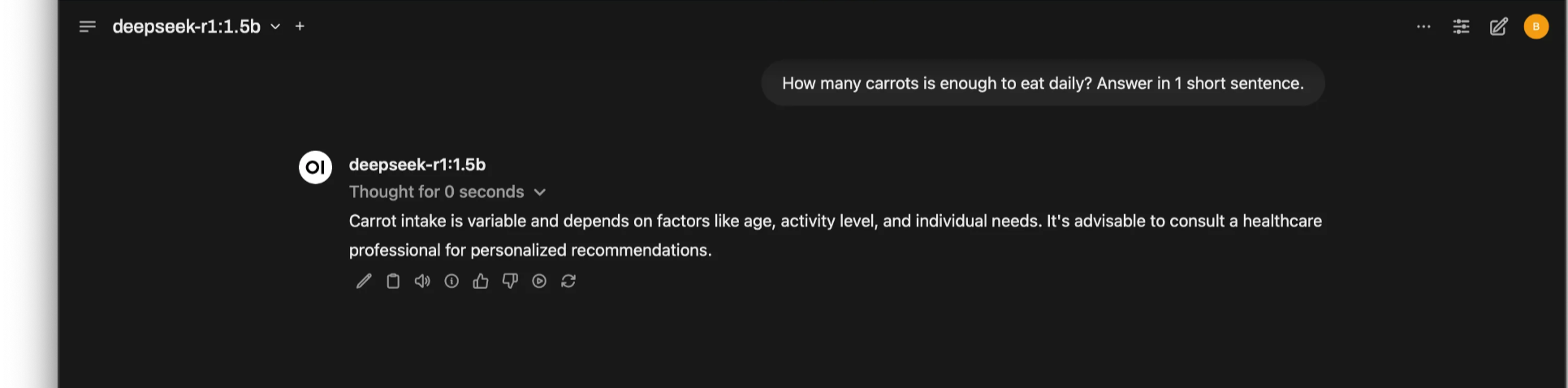

And now, it’s time to query DeepSeek!

Time to enjoy the results!

Here's a quick recap of what we've accomplished:

- Deployed the Ollama API globally, running the DeepSeek R1 model.

- Set up a user-friendly UI to easily query DeepSeek.

- Connected the UI with the API, ensuring seamless interaction.

- Put it to the test by asking DeepSeek how many carrots we should eat daily. 🥕

And the best part? All of this was done in just a few clicks!

Understanding the limitations

While deploying DeepSeek R1 on Magic Containers is an exciting showcase of AI inference at the edge, there are a few limitations to keep in mind:

- Authentication is disabled for simplicity – This guide omits authentication for demonstration purposes, but real-world applications should integrate security measures.

- Stateless execution – The deployment does not persist data, so users may need to re-authenticate periodically. We are actively working on adding persistence, and it is our number one priority.

- Performance constraints – Without GPU acceleration, inference speed is limited, making it suitable for lightweight workloads but not large-scale AI applications.

Despite these constraints, this deployment demonstrates how effortless it is to deploy applications globally. And this is just the beginning—full GPU support is coming soon to Magic Containers, unlocking high-performance AI inference worldwide.

The future: AI at the edge with Magic Containers

Deploying DeepSeek R1 on Magic Containers is just the beginning. As AI models become more efficient and hardware continues to advance, running inference at the edge will become faster, cheaper, and more accessible. And with GPU support on the horizon, Magic Containers will soon unlock even more powerful AI workloads.

Ready to experiment with AI inference on Magic Containers? Get started today and see how simple deploying AI at the edge can be. Need help exploring Magic Containers? Contact our fast and friendly Support team, rated 4.9 out of 5 stars on Trustpilot.