Content delivery on a massive scale often comes with massive challenges. One of these is controlling the load on the origin servers. Most systems are designed with a centralized origin service that is responsible for producing the content that the CDN pulls and caches.

This works great when the content can be largely reused, but much less so when the content has to be generated dynamically on the fly, such as with live video streaming or high-throughput APIs. One caching mistake, and the origin can be hit with tens or even hundreds of Gbit/s of traffic.

Today, many content delivery networks, including bunny.net, rely on the highly popular Nginx server. Nginx offers universal features that allow efficient, high-performance content caching and distribution on a massive scale.

To help control the load on the origin, Nginx offers request coalescing through a cache locking mechanism called proxy_cache_lock. The system allows you to lock requests for the same resource and only allow one request at a time to pass through to the origin. Subsequent requests are then loaded directly from cache. This allows the CDN to control the flow of requests and make sure a large wave of traffic does not overload the origin server. However, while this can successfully reduce the load on the origin, various flaws make it unsuitable for use in a next-generation CDN environment.

Flaws With Nginx Cache Locking

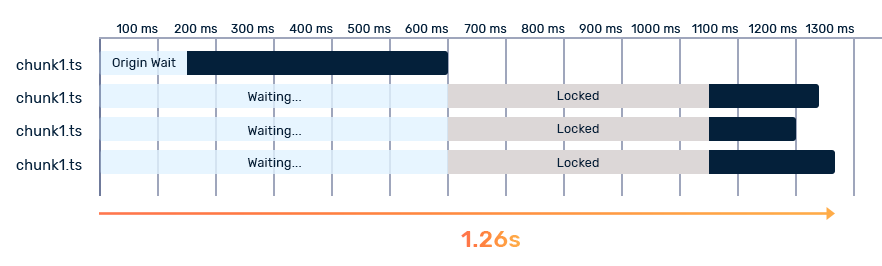

With the proxy_cache_lock system, the Nginx server will detect an uncached request and proceed to send a single request to the origin. Once a response is received, it will then start caching the response by writing it to the disk. While the response is being processed, any further request to the same file will be locked and left waiting for the original request to fully complete. Only once the original request is fully finished and cached will the waiting requests be processed.
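To make the behaviour concrete, here is a minimal Python sketch of a blocking cache lock. It is a toy model, not Nginx code: `LockingCache`, `slow_origin`, and `run_demo` are hypothetical names, and the sketch assumes followers simply block until the first request has downloaded and stored the complete response.

```python
import threading
import time


class LockingCache:
    """Toy cache: followers wait until the leader has cached the full response."""

    def __init__(self, fetch_origin):
        self._fetch_origin = fetch_origin   # hypothetical callable: key -> bytes
        self._cache = {}                    # key -> complete response body
        self._key_locks = {}                # key -> lock held while fetching
        self._guard = threading.Lock()      # protects the shared bookkeeping
        self.origin_hits = 0

    def get(self, key):
        with self._guard:
            lock = self._key_locks.setdefault(key, threading.Lock())
        # Only one request per key gets past this point at a time.
        with lock:
            if key in self._cache:
                return self._cache[key]     # follower: served from cache
            with self._guard:
                self.origin_hits += 1
            body = self._fetch_origin(key)  # leader: the whole body is fetched first
            self._cache[key] = body
            return body


def slow_origin(key):
    time.sleep(0.05)                        # simulated origin latency
    return f"body of {key}".encode()


def run_demo(clients=10):
    cache = LockingCache(slow_origin)
    results = []

    def client():
        results.append(cache.get("/video/segment1.ts"))

    threads = [threading.Thread(target=client) for _ in range(clients)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return cache.origin_hits, results
```

Calling `run_demo()` sends ten simultaneous requests for one uncached file. Only one of them reaches the origin, and the other nine receive nothing at all until that first request has completely finished.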

While this achieves the desired effect of reducing the load on the origin, it comes at a massive cost in performance due to high wait times where no data is being transferred to other clients at all.

To make matters worse, Nginx only unlocks cached requests in 500 ms intervals. In our testing, we found that the average latency added to locked requests was a whopping 450 ms when loaded in a sequence. This means requests can easily end up sitting and waiting in the queue for up to a second or more before even receiving a reply.
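The cost of a fixed unlock interval can be shown with a little arithmetic. The sketch below is a simplified model rather than a description of Nginx internals: it assumes a waiting request re-checks the lock only on a fixed timer that starts when it begins waiting, and `added_wait_ms` is a hypothetical helper name.

```python
def added_wait_ms(ready_at_ms, poll_interval_ms=500):
    """Extra delay for a waiter that only re-checks the lock on a fixed timer.

    ready_at_ms: how long after the waiter arrived the cached response
    became available (integer milliseconds).
    """
    if ready_at_ms <= 0:
        return 0
    # Number of timer ticks needed before the waiter notices the response.
    checks = -(-ready_at_ms // poll_interval_ms)   # integer ceiling division
    return checks * poll_interval_ms - ready_at_ms


# A hypothetical response that is ready 50 ms after the waiter arrived
# is only noticed at the 500 ms tick.
print(added_wait_ms(50))    # 450
# A response ready at 620 ms has to wait for the second tick at 1000 ms.
print(added_wait_ms(620))   # 380
```

Under this model, the faster the origin answers, the more of the interval is wasted, which is why small, quick responses suffer the most.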

Not something we would expect when trying to deliver video with just a few seconds of latency. The increase in TTFB alone is enough to cause buffering in low-latency video delivery situations or waste precious time when calling a real-time API with quickly changing data such as with financial or sports information.

While this alone is enough to make Nginx cache locking unsuitable for modern CDN usage, it actually gets even worse. By design, Nginx performs cache locking at the worker level, and there is usually one worker per CPU core. Effectively, a beefy CDN server with multiple CPUs could in theory let up to 80 requests go straight back to the origin. While this is less dramatic in the real world, in our experience, this limitation alone is enough to easily cause chaos.
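As a rough illustration of why the lock scope matters, the following sketch counts how many origin requests escape for a burst of simultaneous requests to a single uncached file. It assumes, as described above, that each worker keeps its own lock; `origin_requests` and the worker ids are hypothetical.

```python
def origin_requests(request_workers, lock_scope):
    """Count origin fetches for simultaneous requests to one uncached file.

    request_workers: the worker id that handles each incoming request.
    lock_scope: "worker" if every worker has its own lock,
                "server" if one lock is shared by the whole server.
    """
    if lock_scope == "server":
        return 1 if request_workers else 0
    if lock_scope == "worker":
        # Each worker that sees the file lets one request through.
        return len(set(request_workers))
    raise ValueError(f"unknown lock scope: {lock_scope}")


# 1,000 simultaneous requests spread evenly over 80 workers.
burst = [i % 80 for i in range(1000)]
print(origin_requests(burst, "worker"))   # 80
print(origin_requests(burst, "server"))   # 1
```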

At bunny.net, we are on a mission to help make the internet hop faster. To power next-generation planet-scale content delivery, we knew we had to do better. After struggling with the built-in Nginx cache locking, we wanted a better solution to unlock the true performance of a caching edge network. So as always, we went ahead and built one.

Fixing Request Coalescing With Real-Time Request Streaming

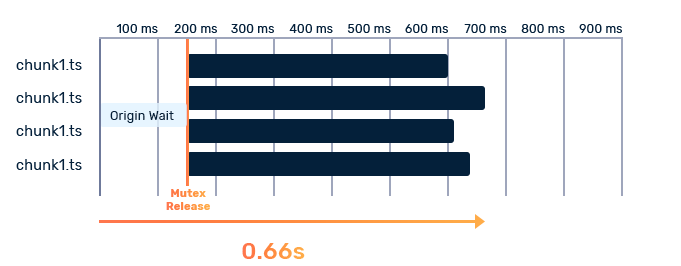

Every millisecond we waste is another millisecond longer your users need to wait for their content. Our goal was to reduce the overhead to 0 ms and have all requests stream back in real-time, no matter which order they arrived in.

To achieve that, we completely changed the locking logic. We disabled Nginx-based locks and moved the locking mechanism from the cache layer to the proxy layer. Now, whenever we receive a request that isn't cached, we set up a mutex lock and send a request to the origin. Every additional request for the same file is then locked with the same mutex. As soon as the origin responds, we connect all of the waiting requests to the response stream of the origin.
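The idea can be sketched in a few lines of Python with asyncio. This is only an illustration of the approach described above, not our production code: `SharedStream`, `Coalescer`, and `fake_origin` are hypothetical names, error handling is omitted, and the single-threaded event loop stands in for the mutex because the check-and-register step contains no `await`.

```python
import asyncio


class SharedStream:
    """Chunks of one in-flight origin response, readable by many clients."""

    def __init__(self):
        self.chunks = []
        self.done = False
        self._cond = asyncio.Condition()

    async def feed(self, chunk):
        async with self._cond:
            self.chunks.append(chunk)
            self._cond.notify_all()          # wake every waiting client

    async def close(self):
        async with self._cond:
            self.done = True
            self._cond.notify_all()

    async def read(self):
        sent = 0
        while True:
            async with self._cond:
                await self._cond.wait_for(
                    lambda: sent < len(self.chunks) or self.done)
                pending = self.chunks[sent:]
            if not pending:                  # stream finished, nothing left
                return
            for chunk in pending:
                yield chunk
            sent += len(pending)


class Coalescer:
    def __init__(self, fetch_origin):
        self._fetch_origin = fetch_origin    # async generator: key -> chunks
        self._inflight = {}                  # key -> SharedStream
        self._tasks = set()                  # keeps pump tasks referenced
        self.origin_hits = 0

    async def _pump(self, key, stream):
        try:
            async for chunk in self._fetch_origin(key):
                await stream.feed(chunk)     # forwarded as soon as it arrives
        finally:
            self._inflight.pop(key, None)
            await stream.close()

    async def get(self, key):
        stream = self._inflight.get(key)
        if stream is None:                   # first request: contact the origin
            stream = self._inflight[key] = SharedStream()
            self.origin_hits += 1
            task = asyncio.create_task(self._pump(key, stream))
            self._tasks.add(task)
            task.add_done_callback(self._tasks.discard)
        async for chunk in stream.read():    # everyone shares the same stream
            yield chunk


async def fake_origin(key):
    for n in range(3):
        await asyncio.sleep(0.01)            # simulated origin latency
        yield f"{key}#{n}".encode()


async def demo(clients=5):
    coalescer = Coalescer(fake_origin)

    async def client():
        return [chunk async for chunk in coalescer.get("/live/seg.ts")]

    bodies = await asyncio.gather(*(client() for _ in range(clients)))
    return coalescer.origin_hits, bodies
```

Running `asyncio.run(demo())` issues five concurrent requests for one uncached file. The origin is contacted once, and every client receives each chunk as soon as the origin produces it rather than after the whole response has been stored.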

This allows for true real-time request streaming from the origin without locks or increased TTFB. It drastically improves end-user experience and response times by effectively eliminating the locking overhead. As soon as the origin replies, all requests are responded to immediately. You can see the stark difference between the two charts.

We set a goal of 0 ms locking, and we've done it. In our testing, especially with smaller files, we saw TTFB drop by over 90%, since requests no longer wait on the lock timers.

Thanks to the new Request Coalescing feature in Bunny CDN, you can now power massive levels of traffic without affecting performance or wait times. You can guarantee an excellent playback experience for your users every step of the way, be it a cached or uncached request, with 5 viewers or 500,000, without ever worrying about your origin or added latency.

Making the internet hop faster!

We are very excited about powering content on a massive scale. The results of the new request coalescing feature exceeded our own expectations and allowed us to solve a lot of legacy CDN issues to power the true next generation of internet apps and content.

The new Request Coalescing feature is already available in preview under the caching settings of your pull zones. The goal is to launch it into general availability over the next few weeks. We invite anyone running live streaming or busy public APIs to give it a go and let us know how it goes. We'd be thrilled to hear about your results.

Join us in powering the next generation of content delivery!

At bunny.net, we are on a mission to make the internet hop faster. That means solving problems on a massive scale and making them as simple as the click of a button. If you're passionate about networks and content delivery, make sure to check out our careers page and drop us a message. We're constantly looking for additional team members to help us build and power the internet of tomorrow.