Modern web frameworks helped us build the web we dreamed of: rich, reactive, alive. React, Vue, Angular, Blazor, and others made shipping beautiful experiences a joy.

But there’s a catch. These sites look beautiful to humans, but search engines, and increasingly AI models, don’t come with a pair of eyes and a brain. When they answer a query and need to deliver instant results, they don’t have time to wait, click, download your framework, populate dynamic data, and hope your content appears in time.

The result? Content gets lost. Faster sources get prioritized, and your pages never get indexed. ChatGPT ends up finding its answers on a different website. And so, your hard work stays hidden behind a spinner, silently swallowed by the noise.

The web was built for people. But times have changed, and if you want visibility today, it must also be optimized for bots. For a long time, this meant the joys of modern frameworks were penalized, and you had to make a choice.

But what if there was a world where you could have both?

Introducing: Bunny Optimizer Page Prerender

Today, we’re giving you the best of both worlds with the launch of Page Prerender to help you turn your JavaScript‑heavy pages into instant, discoverable, bot-optimized HTML. Automatically.

Page Prerender captures a clean, fully rendered HTML snapshot of each of your pages. It then automatically serves it to search engines, AI crawlers, and social preview bots, making everyone happy in the process.

Your users still get your dynamic app. Crawlers get the fast, static HTML they need, and AIs can quickly interact with your website to get answers. In short, everyone wins.

The best part? The only thing needed is to flip a switch. Zero rewrites. Zero code changes, zero framework migrations, and zero custom middleware.

Bunny Optimizer does everything for you, so you can keep building with the modern frameworks you love. We’ll do the rest and make sure it’s served lightning fast, always, and to everyone.

Prerender in action: hop.js example

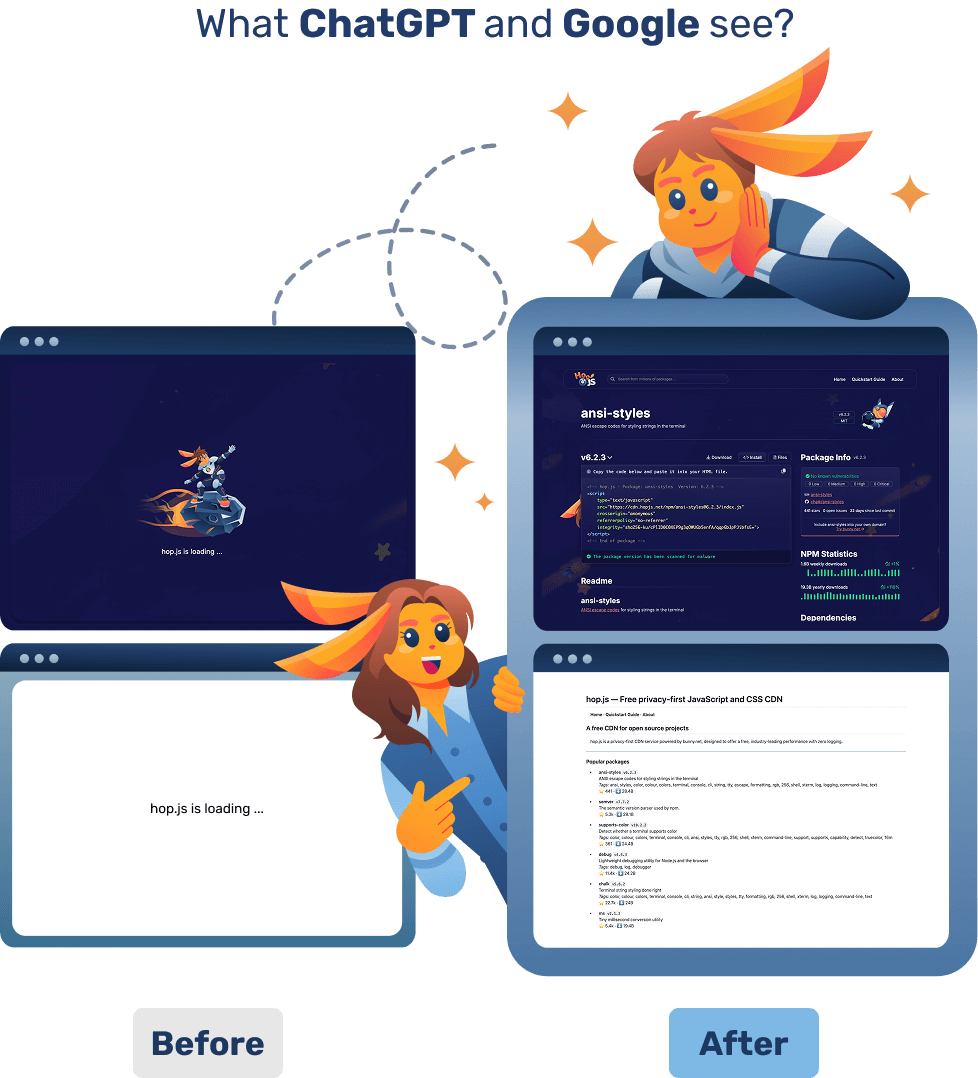

We felt this pain ourselves. We built hop.js using Blazor. It looked great, had fancy loaders and animations, and it was API‑first.

Users loved it, but crawlers? Not so much. Because Blazor runs in WebAssembly, the first page load can be less than optimal, and while we served human users a cute bunny animation, crawlers just saw a loading message.

A flick of a switch later, with Page Prerender enabled, hop.js instantly gained super SEO powers.

Under the hood:

- The same pages became instantly parsable by crawlers

- Headings and metadata became available with no extra configuration

- Crawler page load time was reduced by almost 99.9%, down to milliseconds

Up to 95% better visibility in search

It’s not that crawlers and AI bots aren’t smart enough to execute JavaScript. They simply don’t have the time to do it, especially under load. Users demand instant answers, and if your content is locked behind a bunch of code and dynamic calls, models and crawlers simply miss it.

Page Prerender changes that by serving them clean, static, fully rendered HTML, so they get:

- Immediate content: Headings, links, and copy arrive in the first response. No waiting, no client boot.

- Optimized crawler formatting: We simplify the DOM and normalize critical tags for predictable parsing.

- AI-ready pages: Large language model crawlers see your content and structure immediately, improving understanding and coverage.

- Massive performance boosts: Faster responses reduce crawl budget waste and increase pages discovered per crawl.

For heavily dynamic sites, this can make the difference between existing and not existing on the internet, and can result in up to 95% better visibility in search and AI models.
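To see the gap for yourself, here is a minimal sketch of what a client that doesn't execute JavaScript reads from a page. The two HTML strings are made-up examples, not real hop.js output, and `visible_text` is a hypothetical helper built on Python's standard `html.parser`.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects the text a non-JavaScript client finds in the raw HTML."""

    SKIP = {"script", "style"}  # contents of these tags are not page copy

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self._skip_depth:
            self.chunks.append(text)


def visible_text(html):
    """Return the text content of the HTML exactly as delivered, with no scripts run."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical client-rendered shell: the real content only appears after JavaScript runs.
CSR_SHELL = '<body><div id="app">Loading...</div><script src="/app.js"></script></body>'

# Hypothetical prerendered snapshot of the same page.
PRERENDERED = '<body><div id="app"><h1>Carrot Cake</h1><p>Fresh daily.</p></div></body>'

print(visible_text(CSR_SHELL))    # Loading...
print(visible_text(PRERENDERED))  # Carrot Cake Fresh daily.
```

The first response is all a crawler in a hurry has to work with, which is why the snapshot matters.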

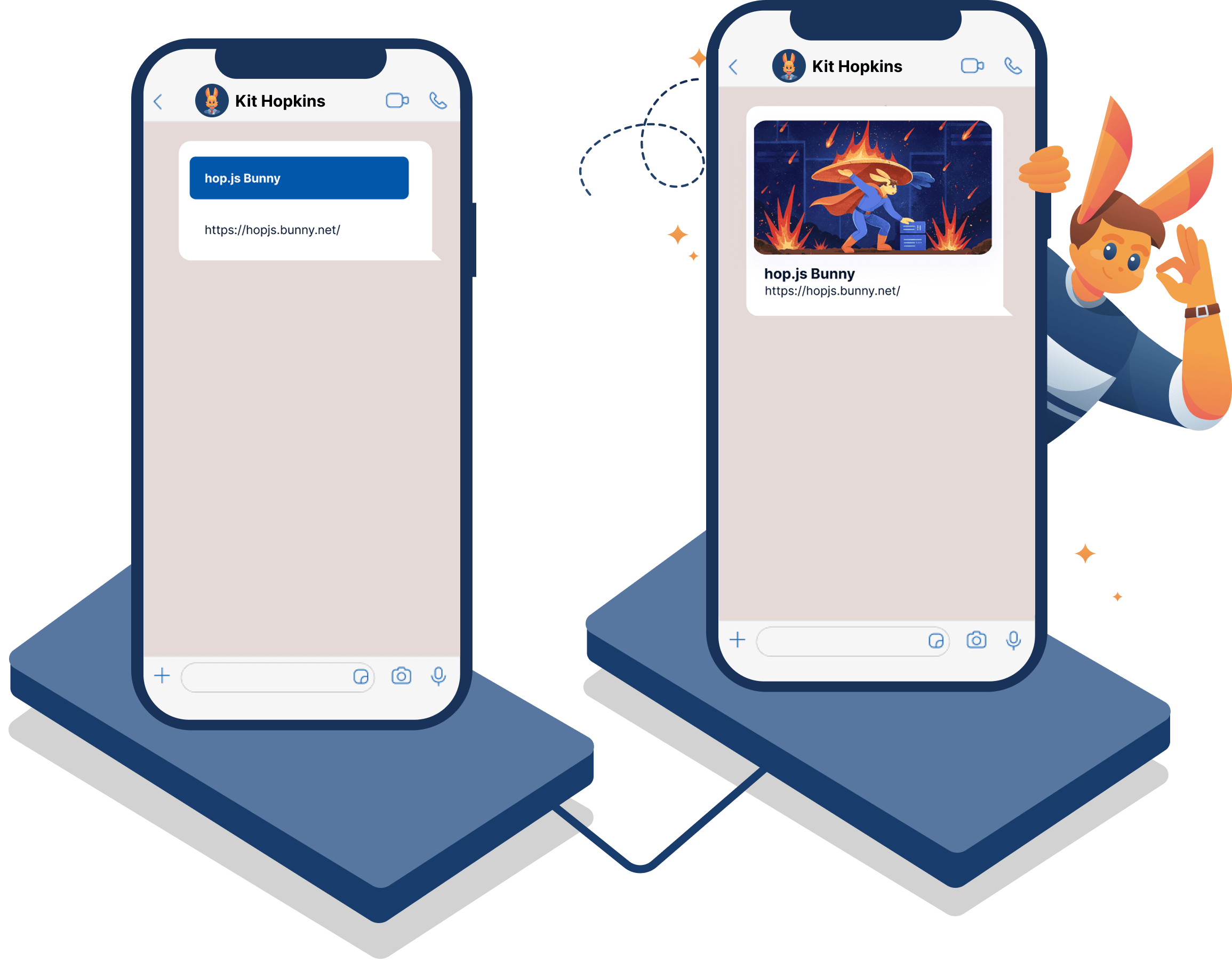

Optimized social media link previews

Ever shared a link and gotten an empty or wrong preview? Many social platforms don’t run JavaScript. Instead, to provide a smooth experience, they rely on Open Graph or custom metadata tags. If they aren’t there, your previews won’t be either. For dynamically rendered sites, the tags might not exist at all until the JavaScript code executes.

With Page Prerender, this problem disappears:

- OG and X meta tags are present at first byte

- Link unfurls show the correct title, description, and hero image

- Your brand looks polished on X, LinkedIn, Facebook, Slack, Discord, and more, consistently

For you, it means more clicks, fewer broken shares, and a preview you’re proud of.
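If you want to check what an unfurler would find in a page's initial HTML, a small script like the one below can help. It is a sketch under assumptions: `preview_tags` and `missing_preview_tags` are hypothetical helper names, the default `required` set simply mirrors the title, description, and image mentioned above, and the sample markup is invented.

```python
from html.parser import HTMLParser


class MetaTagCollector(HTMLParser):
    """Collects Open Graph and twitter: meta tags from raw HTML, without running JavaScript."""

    def __init__(self):
        super().__init__()
        self.tags = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        # Open Graph uses property="og:..."; X/Twitter cards use name="twitter:...".
        key = attr.get("property") or attr.get("name") or ""
        if key.startswith(("og:", "twitter:")) and attr.get("content"):
            self.tags[key] = attr["content"]


def preview_tags(html):
    """Return a dict of the preview-related meta tags present in the HTML."""
    parser = MetaTagCollector()
    parser.feed(html)
    parser.close()
    return parser.tags


def missing_preview_tags(html, required=("og:title", "og:description", "og:image")):
    """List the required tags (an assumed set) that are absent from the HTML."""
    found = preview_tags(html)
    return [name for name in required if name not in found]


# Invented snapshot head, for illustration only.
SNAPSHOT = """<head>
<meta property="og:title" content="Carrot Cake">
<meta property="og:description" content="Fresh daily.">
<meta name="twitter:card" content="summary_large_image">
</head>"""

print(missing_preview_tags(SNAPSHOT))  # ['og:image']
```

Run it against the raw response body of a page, not the DOM from your browser's dev tools, since the latter already reflects whatever JavaScript has added.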

Who is this for?

Page Prerender is a perfect fit for any site where getting content out to the world is your number one priority, especially if JavaScript can cause delays or load dynamic sections.

Perfect for:

- Headless CMS sites (Contentful, Sanity, Strapi, Ghost) rendered client-side

- SPAs and hybrid apps built with React, Vue, Angular, Svelte, Solid, Next/Nuxt in CSR-heavy modes

- E‑commerce catalogs where product details render after hydration

- Docs, blogs, and knowledge bases with dynamic components

- Marketing & campaign pages that must index fast and preview perfectly

- SaaS apps with public pages (pricing, changelogs, status, help center)

If you’re already doing SSR, SSG, or ISR, that’s great. Page Prerender still helps by ensuring crawlers and unfurlers consistently receive a clean, static HTML response from the edge, even when client interactivity is heavy.

How it works (without the headaches)

- Detect: We identify crawlers and bots based on their user agents and create a special cache key.

- Render: We generate an HTML snapshot of your page in an actual browser along the same route your users see.

- Optimize: We normalize critical metadata (title, description, canonical, OG, X tags) for reliable parsing.

- Cache at the edge: We cache snapshots globally and revalidate based on your cache headers, purge rules, and content updates.

- Serve the right version: Users get your dynamic app. Crawlers and social bots get fast, static HTML.

For you, this means no changes, no special routes, and no additional complexity, besides turning it on.
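For the curious, the Detect step can be pictured roughly like this. It is a simplified sketch, not Bunny's actual implementation: the user-agent list is illustrative and incomplete, and `is_crawler`, `cache_key`, and the key format are hypothetical.

```python
import re

# Illustrative, incomplete list of crawler user-agent tokens.
# This is an assumption for the sketch, not Bunny's real detection rules.
BOT_PATTERN = re.compile(
    r"googlebot|bingbot|gptbot|twitterbot|facebookexternalhit"
    r"|linkedinbot|slackbot|discordbot",
    re.IGNORECASE,
)


def is_crawler(user_agent):
    """Return True if the User-Agent header matches a known bot token."""
    return bool(BOT_PATTERN.search(user_agent or ""))


def cache_key(url, user_agent):
    """Give crawlers their own cache entry so snapshots never mix with the user-facing app."""
    variant = "prerender" if is_crawler(user_agent) else "default"
    return f"{variant}:{url}"


googlebot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
chrome = "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 Chrome/126.0 Safari/537.36"

print(cache_key("https://example.com/pricing", googlebot))  # prerender:https://example.com/pricing
print(cache_key("https://example.com/pricing", chrome))     # default:https://example.com/pricing
```

Keying the cache on the variant rather than the full user-agent string keeps the number of cached copies per URL at two, one for bots and one for everyone else.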

Built for integrity, not SEO black hat magic

Page Prerender wasn’t built to trick search engines or AI into liking your page more. It was built to help search engines, LLMs, and bots consume your content more efficiently, exactly as your users see it.

It keeps content identical across devices, with these principles:

- No cloaking: We serve semantically equivalent content; the prerender is a faithful, fully rendered view of your page.

- Respectful crawling: We honor robots.txt and meta directives.

- No SEO manipulation: We only optimize delivery, never alter your content structure or add keywords to game rankings.

- Developer-friendly: Works with any origin behind Bunny Optimizer, including Bunny Storage.

Ready for your website to get discovered?

We’re making Page Prerender available to everyone, starting today. It works with any origin behind Bunny Optimizer, including static apps hosted on Bunny Storage, and turning it on takes three simple steps:

- Open your Pull Zone → Optimizer settings.

- Enable HTML Prerender.

- Publish, and watch your visibility hop.

We’re excited to help you build the future and make it easy for the world to find!