When we set out to reimagine edge computing, we didn’t want to create just another platform that forces you to rebuild your applications in yet another framework. We wanted to build a service that felt like magic, one that would let developers keep using their favorite tools and frameworks. We wanted anyone to be able to deploy any existing or new application, regardless of programming language. Anywhere in the world. In just a few clicks.

To deliver this experience, we had to solve four major challenges: performance, security, efficiency, and flexibility. A lot of research and community conversations all led in the same direction: Docker.

Today, we want to share why we chose Docker as the foundation for Magic Containers and why we believe it’s the best way to deploy complex applications at the edge.

What is Docker, and why is it so popular?

Before we dive in, let’s first explore what Docker is and why developers love using it.

Building and running software can be a tricky endeavor. One missing package, a different OS version, or an outdated dependency, and you might be looking at unexpected crashes, hours of debugging, and a lot of unnecessary frustration, especially when moving applications between local, staging, and production environments.

Docker solves this by packaging your application into a lightweight, standardized unit called an image. An image includes everything needed to run your application and ensures that it behaves the same way in any environment, whether it’s your laptop, a beefy cloud server, a Raspberry Pi, or even a home NAS (as long as the image is built for that machine’s CPU architecture).

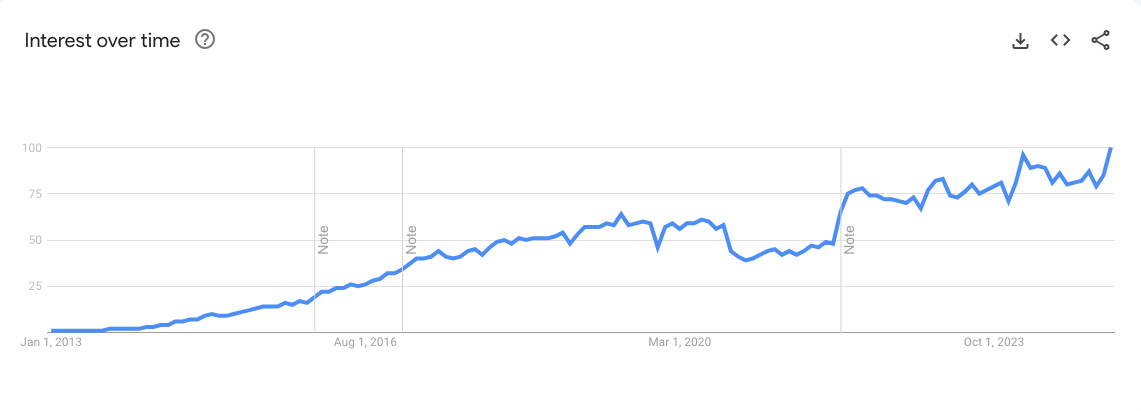

With “Well, it worked on my machine” so common that it became a global meme, it’s no surprise that developers took notice. Docker has exploded in popularity and quickly become the de facto choice for packaging applications. The Google Trends chart below shows how interest in Docker has kept growing over time.

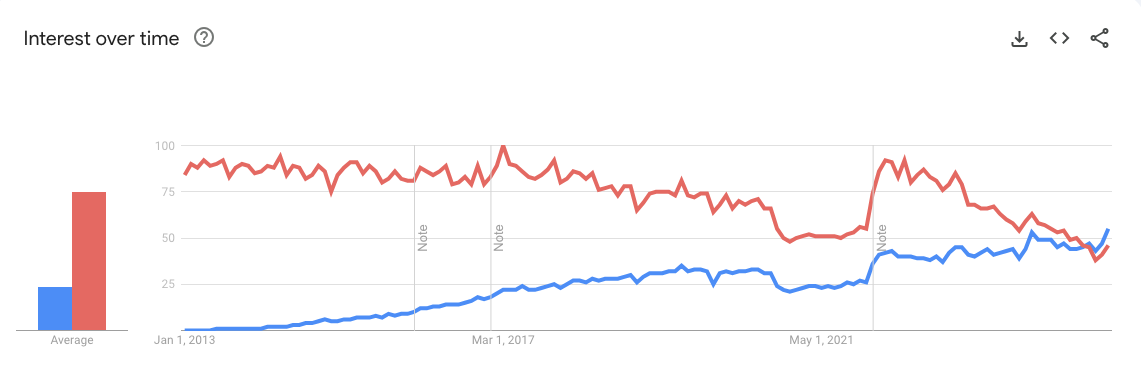

Recently, search interest in Docker even overtook that of JavaScript, one of the most popular programming languages in the world.

The message was clear: developers were already building on Docker, and if we wanted to help anyone run anything, anywhere, Docker was the obvious choice.

But at bunny.net, we don’t adopt a technology just because it’s popular. We adopt it because it’s the right one. So beyond its popularity, let’s dive deeper into why Docker is also the perfect fit for our vision of edge computing and how we used it to make the whole process feel like magic.

Portability: Build once, deploy anywhere

The core reason developers love Docker is also the core reason we found it ideal for running applications at the edge. Complete portability means developers can package any application, test it locally, and then deploy it globally across any kind of hardware, infrastructure, or cloud provider, knowing exactly how it will behave there.

This allows Magic Containers to reliably run any application with zero modifications. Other edge computing services force you to rewrite your app for the latest shiny runtime, emulate it locally, and hope it still works in production. Docker lets you take anything live and have it behave exactly as you expect, without changing a single line of code.

Most importantly, by using Docker, we're also saving developers from vendor lock-in. We want our users to choose bunny.net because it’s a platform they love, not because they have no other choice. Operating with this level of portability pushes us to keep raising the bar and to build exceptional products, not proprietary runtimes, that keep customers coming back.

Ecosystem: Tap into 14M+ public images

Modern, complex applications need more than a runtime and an executable. They usually rely on entire ecosystems of supporting software. This made Docker a perfect candidate, since it lets you package virtually any application, dependency, or framework alongside your own code.

If it runs on Linux, it runs on Docker.

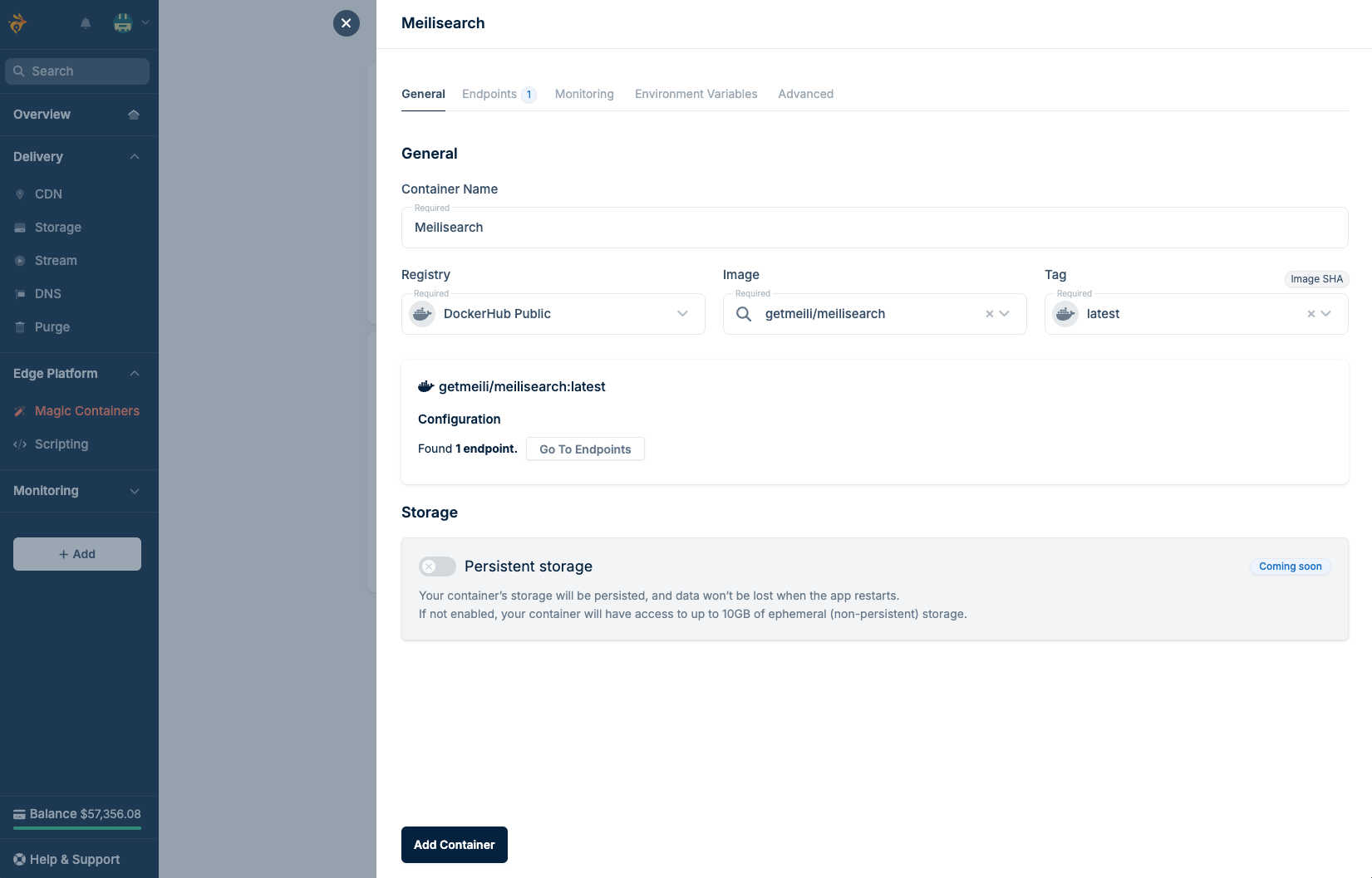

With over 14 million public Docker images available, chances are your favorite database, caching system, message queue, and other applications are all just a few clicks away. For example, deploying Meilisearch, an open-source search engine, alongside your application takes less than 30 seconds.

Magic Containers lets you select multiple images and run them as containers within a single pod. While isolated from the outside world, these containers can then communicate internally as if they were all running on a single machine.

This architecture makes it simple to build and scale complex multi-container applications at the edge and tap into a massive ecosystem of open-source components, tools, and services ready to help you power your applications.

DevOps workflows: CI/CD out of the box with existing tools

For Magic Containers to feel truly magical, it needed to seamlessly integrate with the tools that developers already know and use without introducing yet another packaging workflow. Most teams today rely on CI/CD pipelines to automate their build and deployment workflows, and thanks to the popularity of Docker, those are, more often than not, already built around containers.

We wanted to build a platform that developers could adopt without having to rethink their entire build and deployment strategy. Whether you’re using GitHub Actions, CircleCI, Jenkins, or GitLab CI, Magic Containers fits into your existing workflow, since all it needs to run is a Docker image.

If you can push a Docker image, you can deploy with Magic Containers. It’s that simple.
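Whatever the CI system, the image-producing step usually boils down to the same two Docker CLI commands: `docker build` and `docker push`. The sketch below, written in Python purely for illustration, shows that generic step. The registry host, repository name, and the convention of tagging images with the short commit SHA are assumptions, not Magic Containers requirements, and how Magic Containers is then pointed at the pushed image is not shown here.

```python
import subprocess

# Hypothetical registry and repository names; substitute your own.
REGISTRY = "registry.example.com"
REPOSITORY = "my-team/my-app"


def image_ref(registry: str, repository: str, commit_sha: str) -> str:
    """Build an image reference tagged with the short (7-char) commit SHA."""
    return f"{registry}/{repository}:{commit_sha[:7]}"


def build_and_push(ref: str, context: str = ".", dry_run: bool = True) -> list[list[str]]:
    """Return the docker build/push commands; execute them when dry_run is False."""
    commands = [
        ["docker", "build", "--tag", ref, context],
        ["docker", "push", ref],
    ]
    if not dry_run:
        for cmd in commands:
            # check=True makes the CI step fail if docker exits non-zero.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    ref = image_ref(REGISTRY, REPOSITORY, "3f2a9c1d8e7b6a5f")
    for cmd in build_and_push(ref):
        print(" ".join(cmd))
```

In a real pipeline you would call `build_and_push(ref, dry_run=False)` after logging in to the registry; the dry-run default simply prints the commands so the sketch runs without Docker installed.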

Security: Full system-level isolation

Security in modern cloud computing can quickly turn into a black box, so this was a very important area for us, especially since we run untrusted applications in a shared environment.

On its own, Docker provides only a basic level of isolation that isn’t suitable for running untrusted workloads in a shared environment. However, it offers an extensible sandboxing architecture that we could build upon.

To make Docker work for Magic Containers, we added a sandboxing layer that places each workload behind a dedicated user-space kernel, so your applications run fully isolated from both the host system and other containers. Each pod operates within its own secure environment, with a dedicated system layer, networking, and storage resources. In practice, each deployment has full control over its own resources and no ability to interfere with or access other containers or the host system.

Security was not an afterthought but a foundational decision in building Magic Containers, giving you enterprise-grade security without having to worry about bad neighbors.

We're currently working on a dedicated, in-depth blog post about security, so stay tuned if you're interested in learning more.

Networking: TCP, UDP, HTTP—Docker supports it all

Modern applications rely on more than just HTTP, yet many serverless platforms limit you to just that. With Magic Containers, we took a different approach. We wanted to give you the ability to run absolutely anything, anywhere.

Docker was the perfect choice here. Unlike limited edge runtimes, it provides full control over networking, with support for TCP and UDP, and by extension HTTP, out of the box. Your application gets exactly what it needs without unnecessary restrictions.
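To make “not just HTTP” concrete, here is a plain UDP echo exchange in Python. Inside a container, a service like this simply binds a socket; there is no HTTP request handler to wrap it in. This is a self-contained sketch that runs both client and server in one process over the loopback interface. It is generic socket code, not anything specific to Magic Containers.

```python
import socket


def udp_echo_roundtrip(payload: bytes, host: str = "127.0.0.1") -> bytes:
    """Send one datagram to a UDP echo service and return the reply."""
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    client = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    server.settimeout(2)
    client.settimeout(2)
    try:
        server.bind((host, 0))  # port 0: the OS assigns a free port
        server_addr = server.getsockname()

        # Client: a single datagram, no connection handshake, no HTTP framing.
        client.sendto(payload, server_addr)

        # Server: read the datagram and echo it back to whoever sent it.
        data, sender = server.recvfrom(2048)
        server.sendto(data, sender)

        reply, _ = client.recvfrom(2048)
        return reply
    finally:
        client.close()
        server.close()


if __name__ == "__main__":
    print(udp_echo_roundtrip(b"ping"))
```

The same workload on an HTTP-only runtime would need a protocol translation layer in front of it; in a container it is just a socket.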

Thanks to Docker's support for low-level customization, we gained full control over networking configuration. This allowed us to give every pod its own fully isolated network namespace while all containers within a pod share networking. The result is seamless communication between applications inside a pod, with complete isolation between pods and from the outside world.

Because containers in a pod can reach each other directly, service discovery becomes much simpler: connecting to a database is as straightforward as specifying a localhost address and port.
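Since the containers in a pod share networking, an app can find its neighbor at `127.0.0.1` and a known port. This minimal Python sketch imitates that with two threads in one process: a hypothetical sidecar “database” speaking a made-up one-line protocol, and an app that queries it. In a real pod these would be separate containers, and the port would be whatever the database image listens on (for example, 5432 for PostgreSQL); the demo lets the OS pick a free port so it runs anywhere.

```python
import socket
import threading


def serve_once(listener: socket.socket) -> None:
    """Hypothetical sidecar 'database': answers one 'GET <key>' request."""
    conn, _ = listener.accept()
    with conn:
        request = conn.recv(1024).decode().strip()
        if request.startswith("GET "):
            key = request[len("GET "):]
            conn.sendall(f"VALUE {key}=42\n".encode())  # canned value for the demo
        else:
            conn.sendall(b"ERR\n")


def query(host: str, port: int, key: str) -> str:
    """App side: reach the neighbor by loopback address and port, no registry lookup."""
    with socket.create_connection((host, port), timeout=2) as sock:
        sock.sendall(f"GET {key}\n".encode())
        return sock.recv(1024).decode().strip()


def run_pod_demo() -> str:
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.settimeout(2)  # don't hang forever if the client never connects
    listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    listener.listen(1)
    port = listener.getsockname()[1]

    sidecar = threading.Thread(target=serve_once, args=(listener,))
    sidecar.start()
    try:
        return query("127.0.0.1", port, "answer")
    finally:
        sidecar.join(timeout=2)
        listener.close()


if __name__ == "__main__":
    print(run_pod_demo())  # VALUE answer=42
```

The only “discovery” the app performs is knowing the port, which is the simplification described above.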

Performance: Consistency without compromise

At bunny.net, we're all about performance, so whatever base we chose to build on had to be fast. Really fast. Docker didn't disappoint.

Unlike traditional virtual machines, which rely on hypervisors and full hardware virtualization, Docker provides an efficient, lightweight runtime without that overhead. This allows applications to start almost instantly and run with near-native performance. With careful optimization of the container runtime, we've achieved performance that rivals bare-metal deployments while keeping all the benefits of containerization.

When we built Magic Containers, we didn’t want yet another platform that runs tiny applications quickly and then shuts them down. We wanted a powerhouse that could deliver consistent performance for both CPU- and IO-heavy workloads.

This made Docker the perfect choice, as it provides near-native performance for both IO and memory management.

The future of edge computing is here

Edge computing was supposed to make it easier to deploy applications close to your users. Instead, it brought new security concerns, more complexity, and lock-in.

With Magic Containers, we’re changing that.

By building on Docker, we’re giving developers the freedom to work with the tools they already know and the opportunity to take them global with just a few clicks, without having to rewrite from scratch.

If you’re ready for your deployments to start feeling like magic, hop over, give Magic Containers a try, and let us know how it goes. We'd love to hear your thoughts and feedback as we build the future of cloud computing together.