When we launched Bunny Stream in 2021, we did something bold. In a world where companies existed solely to sell video transcoding, we decided to change the status quo by introducing a video service that provides a full end-to-end solution for video delivery, where the only fees you pay are the bandwidth and storage costs.

Our users loved it, and Bunny Stream took off. With all that growth, the infrastructure had to scale as well. Today, after hundreds of millions of uploaded videos, Bunny Stream operates a massive cluster of over 500 servers working away 24/7 and continues to grow every month.

Free should not mean subpar

We introduced free encoding not just to help users reduce costs, but also to provide a seamless end-to-end experience without all of the traditional headaches that usually happen with video delivery at scale. In a world where "free" often comes with compromises, we were determined to deliver a service that remained exceptional without cutting corners on quality.

Yet, despite all of the new hardware we kept adding, we were continually reminded that encoding video is simply a very resource-intensive process, as we occasionally ran into long processing queues. To address that, we worked year after year on new optimizations. From smart video queuing and encoding optimizations to parallel processing and better error handling, we gradually pushed performance forward.

But today, as the title implies, we're excited to share something much bigger.

Twice the performance, zero the cost

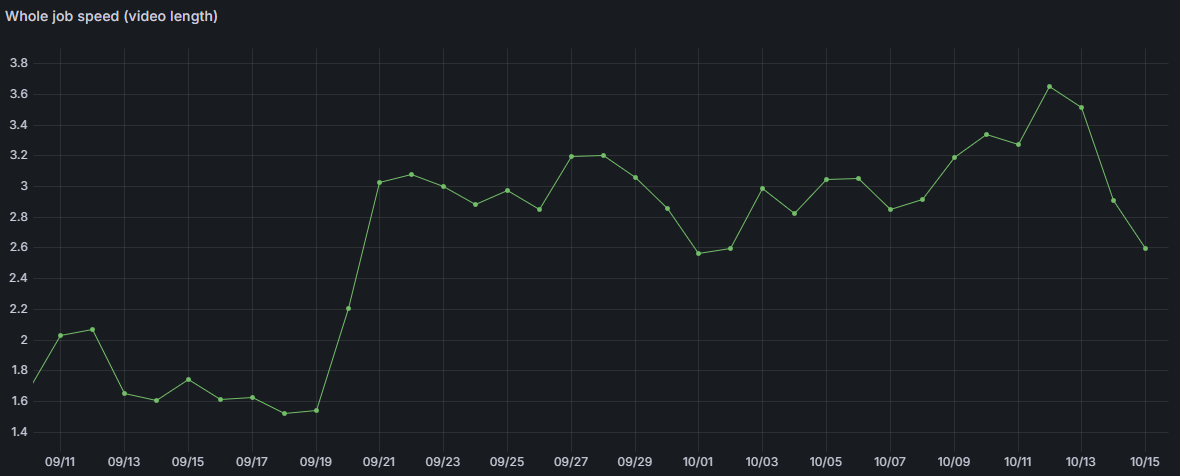

Thanks to two major updates that we launched in September and October, we've just doubled the transcoding capacity for Bunny Stream! These updates include a significant overhaul of our hardware and a critical optimization of our video storage system. Together, they have resulted in a massive improvement in the video processing pipeline.

For you, that means your videos now process twice as fast as they did just a few months ago, and best of all, this comes at the same old price of free.

Here's how we did it.

Distributed storage load balancing

For a while, we noticed that transcoding speeds weren't scaling linearly with newly installed hardware. It turned out that, with all the computing power at our disposal, we were producing so much data that the bottleneck became the video storage system itself.

Bunny Storage, like encoding, runs in large clusters of hundreds of servers per region. When a file is uploaded, these massive clusters work together to find an ideal destination for it. During this process, we take many factors into account, including server load, connection count, and more. This allows us to efficiently write tens of Gbits of files per second across the cluster, but even that wasn't quite enough for the load that Bunny Stream was producing.

When debugging slow areas of video encoding, we realized that some encoded chunks were uploading more slowly than others and occasionally slowed to a crawl. After a lengthy investigation, we learned that the load-balancing logic had one major flaw: while it factored in all these metrics, every server performed this logic independently. In some situations, this led to many API servers deciding to write to the same optimal servers at the same time, which quickly slowed things down.

To address this, we introduced a new, distributed load-balancing system that runs on each of our storage servers. Initially developed for close-to-realtime global security information synchronization for our CDN, this system has now been built into storage. It allows us to share real-time data across every Bunny Storage API server and to communicate each routing decision to every other API server, significantly improving the load-balancing process. With this update, the cluster now operates as a single distributed load balancer, making significantly better decisions than tens of servers deciding independently.
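To make the difference concrete, here is a minimal toy sketch in Python of the two behaviors described above. It is not our production code: the server names, the single "load" number, and the function names are all assumptions made for illustration, and the real system weighs many more metrics. It only shows why identical, independent decisions pile onto one server, while shared routing decisions spread the writes out.

```python
from collections import Counter


def pick_least_loaded(loads):
    """Return the storage server with the lowest known load (name breaks ties)."""
    return min(loads, key=lambda server: (loads[server], server))


def route_independently(loads, api_servers):
    """Each API server decides from its own private snapshot of the loads.

    No API server sees the others' choices, so they all pick the same
    "optimal" storage server at the same moment.
    """
    assigned = Counter()
    for _ in range(api_servers):
        snapshot = dict(loads)  # private copy, hypothetical per-server view
        assigned[pick_least_loaded(snapshot)] += 1
    return assigned


def route_with_shared_state(loads, api_servers):
    """Each routing decision is visible to every other API server.

    The shared view is updated after every decision, so the next API
    server sees the extra load and may choose a different target.
    """
    shared = dict(loads)  # hypothetical cluster-wide view
    assigned = Counter()
    for _ in range(api_servers):
        target = pick_least_loaded(shared)
        shared[target] += 1
        assigned[target] += 1
    return assigned


# Hypothetical starting loads for four storage servers.
initial_loads = {"storage-1": 2, "storage-2": 0, "storage-3": 1, "storage-4": 3}

independent = route_independently(initial_loads, api_servers=8)
shared = route_with_shared_state(initial_loads, api_servers=8)

print("independent:", dict(independent))
print("shared:     ", dict(shared))
```

In this toy run, all eight independent writes land on the same storage server, while the shared-state version spreads them across three servers and leaves the cluster far more evenly loaded.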

This change in load balancing resulted in up to a 10X improvement in file writes during stressful situations and massively improved not just Bunny Stream but also the ability of Bunny Storage to write data fast. Really fast.

This had a significant impact on the whole encoding pipeline, almost instantly increasing the encoding throughput by as much as 2X once the update went into production.

Switching to the latest Intel-based CPUs

The second piece of the performance puzzle was the encoding hardware.

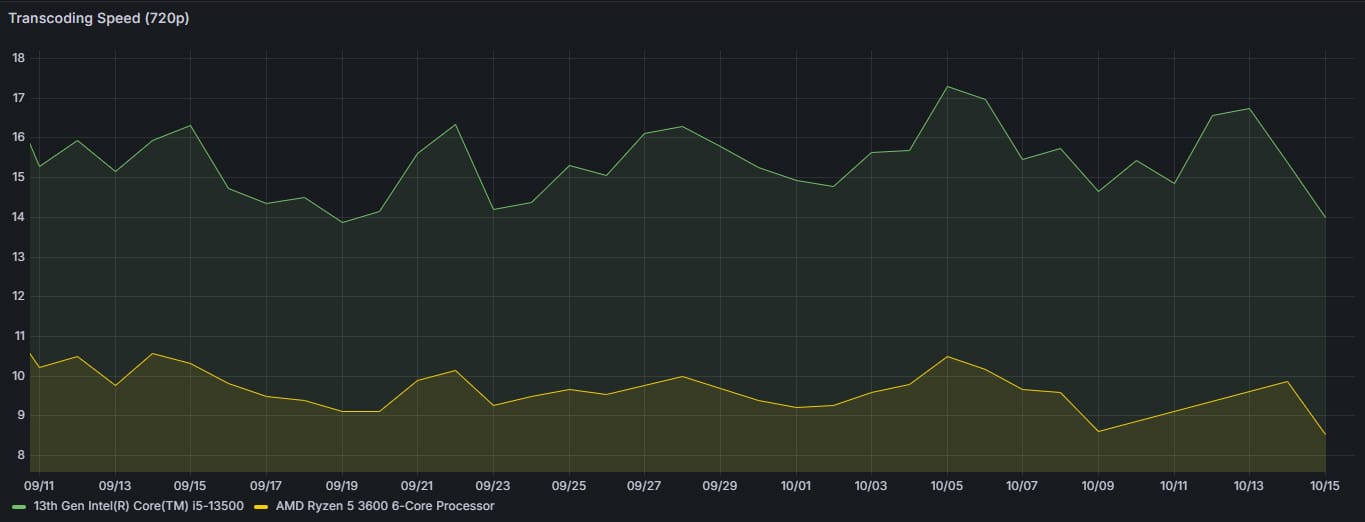

Historically, Bunny Stream was powered by AMD Ryzen 5 3600 CPUs. Thanks to our optimized hardware stack, these CPUs offered an excellent price-to-performance ratio and an ability to encode in a highly parallelized environment. However, as technology continues to evolve, we are constantly evaluating different hardware configurations in order to continually hop ahead.

In October, we made the switch. After rigorous testing and a major hardware investment, we kickstarted an ambitious new project to completely overhaul our transcoding cluster and switch to the latest Intel i5-13500 CPUs. While this took a significant amount of resources, we doubled down with the goal of offering an exceptional service without relying on compromises.

The result? A staggering 60% increase in transcoding speed.

Building a better way to deliver online video

We built Bunny Stream as a platform to provide a better way to deliver online video, and for us, that mission never ends. We're excited to share a glimpse into how we're making Bunny Stream better every day and continually pushing the boundaries of performance to provide exceptional user experiences without all the complexities.

We have exciting plans ahead for Bunny Stream, and if you're passionate about video and streaming and would like to help make those a reality, make sure to check out our careers page.