CDN Caching

Introduction

A content delivery network (CDN) improves the end user’s website experience by reducing latency and loading times. It does this largely through CDN caching. The concept of caching is simple: a server stores a copy of a requested file and serves that copy for subsequent requests.

When you browse the Internet, your web browser downloads the files needed to display a web page correctly (e.g. images, videos and HTML files) from the web server that hosts the website, called the origin server. These files are stored on your disk; in other words, they are “cached.”

The next time you visit the same web page, your browser can load these cached files from your computer’s disk immediately, so the page feels like it loads instantly. The cached files remain stored for a set amount of time, or until you explicitly delete them from your computer.

CDN caching is similar to browser caching, except that the copies of the files needed to display a website are not stored on your computer. Instead, they are stored on servers called edge servers, located in points of presence (POPs) around the world, so content is served from a location geographically close to users. Even if the origin server is halfway around the globe, your browser can fetch content from a nearby edge server’s cache. This improves the experience even on your first visit to a page, as long as the edge server has already cached it.
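As a rough sketch of "geographically close," the snippet below picks the edge server with the shortest great-circle distance to a user. The edge names and coordinates in EDGE_SERVERS are hypothetical, and straight-line distance is only an assumed proxy: in practice, CDNs commonly route users with DNS or anycast and take network conditions into account, not geography alone.

```python
import math
from typing import Dict, Tuple

# Hypothetical edge locations as (latitude, longitude) in degrees
EDGE_SERVERS: Dict[str, Tuple[float, float]] = {
    "frankfurt": (50.11, 8.68),
    "singapore": (1.35, 103.82),
    "new-york": (40.71, -74.01),
}

EARTH_RADIUS_KM = 6371.0


def great_circle_km(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Haversine distance in kilometres between two (lat, lon) points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_edge(user: Tuple[float, float]) -> str:
    """Return the name of the edge server closest to the user's location."""
    return min(EDGE_SERVERS, key=lambda name: great_circle_km(user, EDGE_SERVERS[name]))


# A user in Paris would be sent to the (hypothetical) Frankfurt edge
print(nearest_edge((48.86, 2.35)))  # frankfurt
```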

Purging CDN cache content

When an edge server requests a copy of the website’s content from the origin server for caching, the origin server usually attaches time-to-live (TTL) information to its HTTP response headers, for example through the max-age or s-maxage directive of the Cache-Control header. The TTL tells the edge server how long it may keep the cached copy. When the TTL is up, the cached copy expires and is removed from the edge server. The website owner can also purge cached files manually before the TTL is up, and some CDNs evict cached files early if they have not been served for some time.
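A minimal sketch of TTL handling on an edge server, assuming the TTL is read from the Cache-Control header. It prefers s-maxage (which applies to shared caches such as CDNs) over max-age, drops a copy once its TTL is up, and supports a manual purge. The EdgeCache class and its method names are hypothetical; the sketch does not interpret other directives (such as private or no-cache), and it simply skips caching when no TTL is sent, whereas real CDNs often apply a default TTL.

```python
import time
from typing import Callable, Dict, Optional, Tuple


def ttl_from_cache_control(header: str) -> Optional[int]:
    """Return the TTL in seconds from a Cache-Control value, preferring s-maxage."""
    directives: Dict[str, str] = {}
    for part in header.split(","):
        name, _, value = part.strip().partition("=")
        directives[name.lower()] = value.strip().strip('"')
    for key in ("s-maxage", "max-age"):
        if directives.get(key, "").isdigit():
            return int(directives[key])
    return None  # no usable TTL directive


class EdgeCache:
    """Hypothetical edge cache that honours TTLs and manual purges."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._entries: Dict[str, Tuple[bytes, float]] = {}  # url -> (body, expiry time)

    def store(self, url: str, body: bytes, cache_control: str) -> None:
        ttl = ttl_from_cache_control(cache_control)
        if not ttl:
            # Missing or zero TTL: this sketch does not cache the response
            self._entries.pop(url, None)
            return
        self._entries[url] = (body, self._clock() + ttl)

    def lookup(self, url: str) -> Optional[bytes]:
        entry = self._entries.get(url)
        if entry is None:
            return None
        body, expires_at = entry
        if self._clock() >= expires_at:
            # The TTL is up: remove the expired copy
            del self._entries[url]
            return None
        return body

    def purge(self, url: str) -> None:
        """Manually remove a cached copy before its TTL is up."""
        self._entries.pop(url, None)
```

Passing a fake clock into EdgeCache makes expiry easy to test without waiting for real time to pass.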

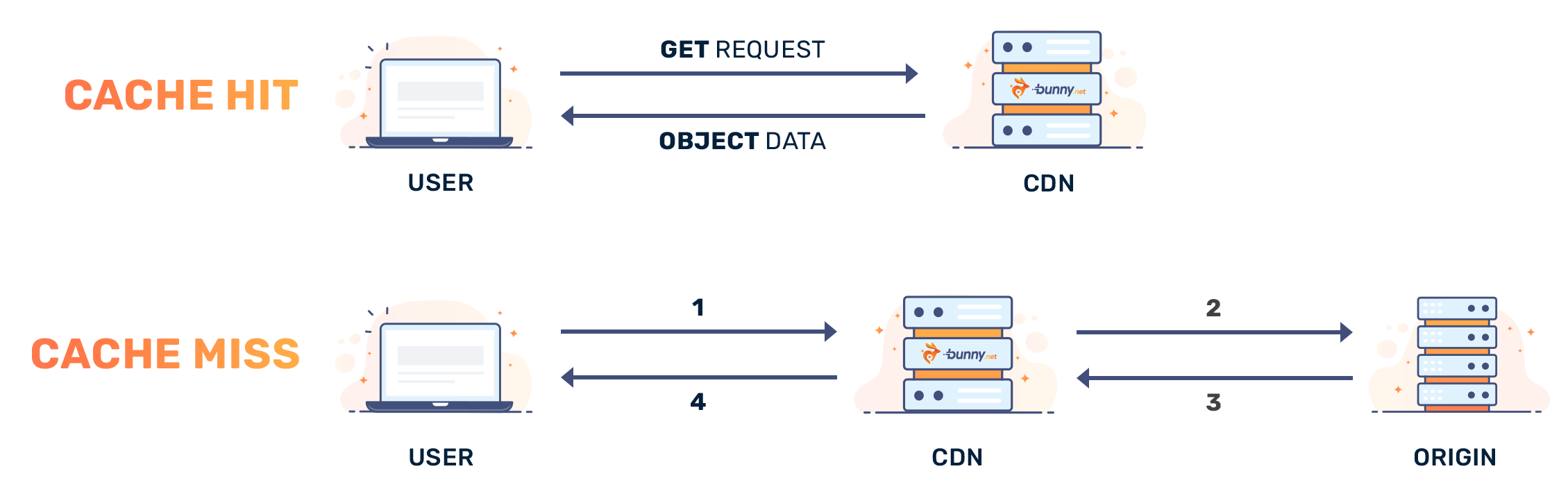

CDN Cache HITs and Cache MISSes

When you visit a website that uses a CDN, a requested file may not yet be cached on the edge server. This is a “cache MISS”: the data is not available on the edge server, so the edge server must first fetch it from the origin server before sending it back to the client.

When the file is already available in the edge cache, it is a “cache HIT”: the edge server sends the file back to the client directly, avoiding the delay of an extra request back to the origin server.
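The HIT and MISS paths can be sketched as a small request handler. This assumes a hypothetical origin callable in place of a real HTTP request to the origin server, and it ignores TTLs so the two paths stay easy to see. It also counts hits and misses, which are the inputs to the cache hit ratio.

```python
from typing import Callable, Dict, Tuple


class EdgeServer:
    """Hypothetical edge server that serves HITs from cache and fetches MISSes."""

    def __init__(self, origin: Callable[[str], bytes]) -> None:
        self._origin = origin  # stand-in for a request to the origin server
        self._cache: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    def handle(self, url: str) -> Tuple[bytes, str]:
        if url in self._cache:
            # Cache HIT: serve directly from the edge, no trip to the origin
            self.hits += 1
            return self._cache[url], "HIT"
        # Cache MISS: fetch from the origin, then keep a copy for next time
        self.misses += 1
        body = self._origin(url)
        self._cache[url] = body
        return body, "MISS"


edge = EdgeServer(origin=lambda url: f"contents of {url}".encode())
print(edge.handle("/index.html")[1])  # MISS
print(edge.handle("/index.html")[1])  # HIT
```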

Cache Hit Ratio

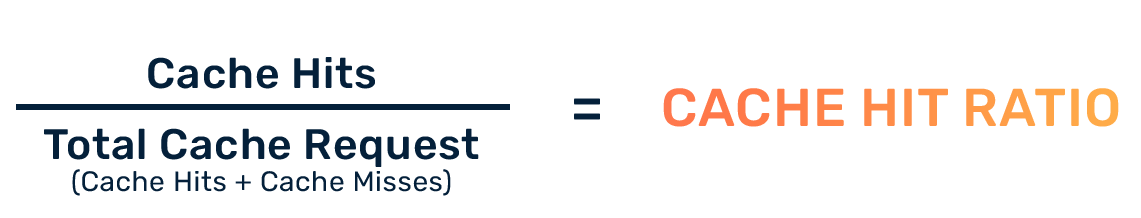

The cache hit ratio is one measure of a CDN’s cache performance. It is the proportion of requests to the CDN’s servers that are cache hits. The formula for calculating the cache hit ratio is as follows:

Cache hit ratio = cache hits / (cache hits + cache misses)

For example, suppose a CDN server logged 90 cache hits and 10 cache misses. The total number of requests is 90 + 10 = 100, so the cache hit ratio is 90 / 100 = 0.9, or 90 percent.
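The same calculation as a small Python function. The name cache_hit_ratio is illustrative, and the function guards against dividing by zero when no requests have been logged.

```python
def cache_hit_ratio(hits: int, misses: int) -> float:
    """Cache hit ratio = hits / (hits + misses)."""
    total = hits + misses
    if total == 0:
        raise ValueError("no requests recorded")
    return hits / total


print(f"{cache_hit_ratio(90, 10):.0%}")  # 90%
```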

Ideally, every request to a CDN server would be a HIT for maximum performance. In reality, there will be both hits and misses. A good cache hit ratio is typically between 95 and 99 percent, which means that only 1 to 5 percent of requests go back to the origin server. The higher the cache hit ratio, the fewer requests reach the origin server, which reduces its load and bandwidth usage.
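To see why a few percentage points matter, this sketch estimates how many requests reach the origin server for a hypothetical 1,000,000 requests at several hit ratios. The origin_requests helper is illustrative, not part of any CDN API.

```python
def origin_requests(total_requests: int, hit_ratio: float) -> int:
    """Estimate how many requests miss the edge cache and go back to the origin."""
    return round(total_requests * (1 - hit_ratio))


# Hypothetical traffic volume, used only for illustration
for ratio in (0.90, 0.95, 0.99):
    print(f"{ratio:.0%} hit ratio -> {origin_requests(1_000_000, ratio):,} origin requests")
# 90% hit ratio -> 100,000 origin requests
# 95% hit ratio -> 50,000 origin requests
# 99% hit ratio -> 10,000 origin requests
```

In this example, raising the hit ratio from 90 to 99 percent cuts the traffic reaching the origin server tenfold.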

A lower cache hit ratio is not necessarily a problem, however. If a website’s content is highly dynamic and changes often, much of it cannot be cached for long, so a lower ratio is expected. In general, though, if the cache hit ratio is low and the content is mostly static, it is worth checking whether the CDN is working and configured properly.