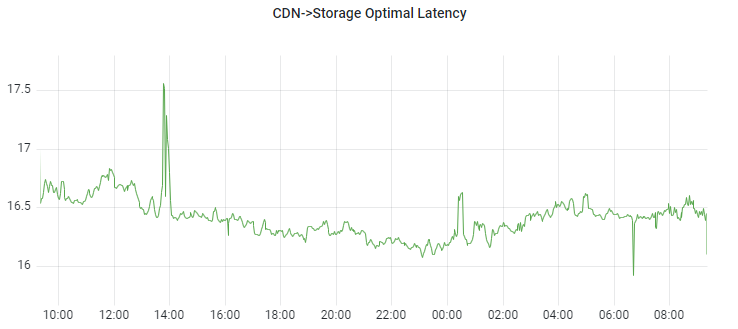

We keep calling Bunny Storage the fastest cloud storage in the world, and with the recent Johannesburg expansion, it's now running faster than ever. Thanks to the new region, Bunny Storage is now less than 17ms away from Bunny CDN on a global average, which makes Bunny Storage faster than even some CDNs.

Today, we want to share more about how we achieve, measure, and optimize this incredible performance, and what goes into maintaining it at a global scale across 15 storage regions and 112+ CDN regions, so that every request delivers an experience that is not only fast but also consistently reliable.

Real-Time Routing Optimizations

The main component in making all of this work is the routing engine itself. When we initially launched Bunny Storage a few years ago, each CDN region was hard-coded with a routing table that instructed each edge node to connect to the appropriate region in a priority order.

However, the internet is highly dynamic. Routes between various peering points can shift on a daily basis and without warning, especially during major transit outages or cable cuts. That means a network route can turn from great to horrible in a matter of seconds.

As we scaled the number of CDN and storage regions, this approach quickly became unsustainable to maintain and started to hurt the overall stability and performance of the system.

Today, every CDN node monitors its own routes to each storage region in real time. This allows us to adjust routing in real time for every request, from every server. For example, the logs on one of our servers look something like this:

Edge Storage latency result for zone SG - Latency: 0ms Status: ONLINE Average latency: 0 ms
Edge Storage latency result for zone HK - Latency: 45ms Status: ONLINE Average latency: 45 ms
Edge Storage latency result for zone SYD - Latency: 88ms Status: ONLINE Average latency: 88 ms
Edge Storage latency result for zone JP - Latency: 92ms Status: ONLINE Average latency: 92 ms
Edge Storage latency result for zone DE - Latency: 163ms Status: ONLINE Average latency: 167 ms
Edge Storage latency result for zone CZ - Latency: 165ms Status: ONLINE Average latency: 165 ms
Edge Storage latency result for zone ES - Latency: 186ms Status: ONLINE Average latency: 186 ms
Edge Storage latency result for zone WA - Latency: 187ms Status: ONLINE Average latency: 187 ms
Edge Storage latency result for zone SE - Latency: 188ms Status: ONLINE Average latency: 187 ms
Edge Storage latency result for zone UK - Latency: 189ms Status: ONLINE Average latency: 189 ms
Edge Storage latency result for zone LA - Latency: 195ms Status: ONLINE Average latency: 195 ms
Edge Storage latency result for zone NY - Latency: 256ms Status: ONLINE Average latency: 260 ms
Edge Storage latency result for zone MI - Latency: 258ms Status: ONLINE Average latency: 258 ms
Edge Storage latency result for zone BR - Latency: 343ms Status: ONLINE Average latency: 341 ms
Edge Storage latency result for zone JH - Latency: 344ms Status: ONLINE Average latency: 340 ms

With the new system, we collect network information between thousands of different endpoints, and each server is aware of every storage region without relying on any external services. This provides accurate metrics and avoids a single point of failure, so each server can make decisions on its own and pick the best destination for every specific customer zone.
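The log above pairs a latest probe result with a running average for each zone. A minimal sketch of that per-server bookkeeping is shown below, assuming an exponentially weighted moving average with a hypothetical smoothing factor of 0.2; the post does not say how the average is actually computed, and `LatencyTable` and `RegionStats` are illustrative names.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class RegionStats:
    """Latency state for one storage region, as seen from a single edge server."""
    latest_ms: float = 0.0
    average_ms: Optional[float] = None
    online: bool = True


class LatencyTable:
    """Hypothetical per-server view of every storage region, built only from local probes."""

    def __init__(self, alpha: float = 0.2) -> None:
        # Weight given to the newest sample in the moving average (assumed value).
        self.alpha = alpha
        self.regions: Dict[str, RegionStats] = {}

    def record(self, zone: str, latency_ms: Optional[float]) -> None:
        """Store one probe result; None means the probe failed or timed out."""
        stats = self.regions.setdefault(zone, RegionStats())
        if latency_ms is None:
            stats.online = False
            return
        stats.online = True
        stats.latest_ms = latency_ms
        if stats.average_ms is None:
            stats.average_ms = latency_ms
        else:
            # EWMA: a single noisy sample only nudges the average.
            stats.average_ms = self.alpha * latency_ms + (1 - self.alpha) * stats.average_ms

    def ranked(self) -> List[str]:
        """Online zones, ordered from lowest to highest average latency."""
        candidates = [
            (zone, s.average_ms)
            for zone, s in self.regions.items()
            if s.online and s.average_ms is not None
        ]
        return [zone for zone, _ in sorted(candidates, key=lambda c: c[1])]


table = LatencyTable()
for zone, ms in [("SG", 0), ("HK", 45), ("DE", 163), ("DE", 180)]:
    table.record(zone, ms)
print(table.ranked())  # ['SG', 'HK', 'DE']
```

Because every server keeps its own table, losing one server or one probe only affects that server's view, which matches the no-single-point-of-failure goal described above.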

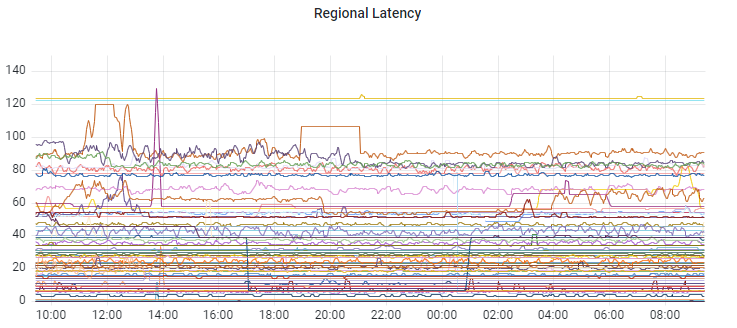

To give our infrastructure team visibility into what's going on, all of this data is also sent to Grafana, where we set alerts and monitor for any issues that require a manual response from the team.

On top of the average global latency, we also monitor regional latency from each CDN PoP, along with other metrics such as server status, throughput, request times, and packet loss. All of this data feeds into smart routing decisions that deliver reliable, consistent performance at all times.
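One way to fold several of these metrics into a single routing decision is a weighted score. The sketch below is hypothetical: the `PathMetrics` fields, the packet-loss penalty of 20 ms per percent, and the scoring formula are illustrative assumptions, not bunny.net's actual weighting.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Assumed trade-off: each 1% of packet loss counts as much as 20 ms of extra latency.
LOSS_PENALTY_MS_PER_PERCENT = 20.0


@dataclass
class PathMetrics:
    """Hypothetical per-region snapshot kept by one edge server."""
    avg_latency_ms: float
    packet_loss_pct: float  # percentage of probes lost, 0 to 100
    online: bool


def path_score(m: PathMetrics) -> float:
    """Lower is better; an offline region can never win."""
    if not m.online:
        return float("inf")
    return m.avg_latency_ms + m.packet_loss_pct * LOSS_PENALTY_MS_PER_PERCENT


def best_region(metrics: Dict[str, PathMetrics]) -> Optional[str]:
    """Return the region with the lowest score, or None if every region is offline."""
    usable = [
        (path_score(m), zone)
        for zone, m in metrics.items()
        if path_score(m) != float("inf")
    ]
    if not usable:
        return None
    return min(usable)[1]


snapshot = {
    "DE": PathMetrics(avg_latency_ms=163, packet_loss_pct=0.0, online=True),
    "CZ": PathMetrics(avg_latency_ms=165, packet_loss_pct=2.0, online=True),
    "SG": PathMetrics(avg_latency_ms=0, packet_loss_pct=0.0, online=False),
}
print(best_region(snapshot))  # DE
```

Here CZ is only 2 ms slower than DE on average, but its packet loss pushes its score to 205, so DE wins; an offline region is excluded no matter how low its latency.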

Any performance regression is resolved within 60 seconds of being detected by selecting a new, more optimal route.

If connectivity to a region is lost within that 60-second window, requests are automatically retried against the next-closest region, which drastically reduces failed requests.
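The two behaviors above, re-ranking on a fixed interval and failing over to the next-closest region in between, can be sketched as follows. `RouteSelector`, `fetch_with_failover`, and the injected `fetch` function are hypothetical names; a real edge server would plug in its own probe data and HTTP client.

```python
import time
from typing import Callable, List, Optional, Sequence


class RouteSelector:
    """Caches a ranked region list and re-ranks at most once per interval."""

    def __init__(
        self,
        rank: Callable[[], List[str]],
        interval_s: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.rank = rank  # returns regions ordered best-first from live measurements
        self.interval_s = interval_s
        self.clock = clock
        self._order: Optional[List[str]] = None
        self._ranked_at = 0.0

    def order(self) -> List[str]:
        now = self.clock()
        if self._order is None or now - self._ranked_at >= self.interval_s:
            self._order = self.rank()
            self._ranked_at = now
        return self._order


def fetch_with_failover(order: Sequence[str], fetch: Callable[[str], bytes]) -> bytes:
    """Try regions closest-first; on a connection failure move to the next one."""
    last_error: Optional[Exception] = None
    for region in order:
        try:
            return fetch(region)
        except ConnectionError as exc:
            last_error = exc
    raise ConnectionError("every storage region failed") from last_error


def fake_fetch(region: str) -> bytes:
    # Stand-in for a real storage request: pretend SG just became unreachable.
    if region == "SG":
        raise ConnectionError("SG unreachable")
    return f"served by {region}".encode()


selector = RouteSelector(rank=lambda: ["SG", "HK", "SYD"])
print(fetch_with_failover(selector.order(), fake_fetch))  # b'served by HK'
```

The failover loop covers the gap until the next re-ranking, after which the unreachable region would normally drop out of the ordering on its own.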

Manual Routing Overrides

While automation and monitoring are key to every scalable, high-performance system, mistakes can happen, and some issues might simply go undetected. To handle those cases, we've built a manual region override switch that lets us steer traffic elsewhere when needed.

This allows us to work around unpredictable issues or application-level problems, and this is where our infrastructure team comes into play. The Infra Bunnies keep an eye on the system around the clock and make sure any issues are addressed immediately.

While we don't resort to manual overrides often, they are there to act as a fail-safe when everything else fails.
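A manual override can be modeled as a layer applied on top of the automatic ranking. This hypothetical `apply_overrides` function assumes two kinds of switches, draining a region and forcing one to be tried first; the post does not describe the real switch's semantics.

```python
from typing import Iterable, List, Optional, Set


def apply_overrides(
    auto_order: Iterable[str],
    drained: Set[str],
    forced: Optional[str] = None,
) -> List[str]:
    """Layer operator overrides on top of the automatic ranking.

    drained: regions an operator has taken out of rotation.
    forced:  a region an operator wants tried first, even if the probes disagree.
    """
    order = [region for region in auto_order if region not in drained]
    if forced is not None and forced not in drained:
        order = [forced] + [region for region in order if region != forced]
    return order


auto = ["SG", "HK", "SYD"]
print(apply_overrides(auto, drained={"HK"}))               # ['SG', 'SYD']
print(apply_overrides(auto, drained=set(), forced="SYD"))  # ['SYD', 'SG', 'HK']
```

Keeping overrides as a separate layer means the automatic ranking keeps updating underneath, so removing an override immediately restores the measured ordering.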

Global Network Backbone

While smart traffic steering is great, the underlying network path matters even more. To achieve the lowest possible latency, Bunny Storage's physical servers reside right next to our Bunny CDN hardware. This provides sub-millisecond latency between the two systems and, thanks to 40 Gbit of connectivity per server, requests for data on Edge SSD tier storage often perform similarly to cache HITs.

Not every bunny.net CDN PoP is also a storage PoP, but we've made sure those are as fast as possible too. Thanks to the efforts of our network partners over the past few years, the majority of Bunny Storage PoPs now connect to each other through a private global network backbone powered by multiple redundant 100 Gbit uplinks.

The backbone spans North America, Europe, and Asia and provides clean, predictable connectivity between our edge network and the storage nodes, even during an outage or issue with third-party transit partners. We are continuing to expand the backbone to more regions around the world, with the goal of one day connecting all of our regions through our own backbone.

Global Keep-Alive

Last but not least is connection management between Bunny CDN and Bunny Storage. Setting up a new connection, especially over a high-latency path, can be detrimental to performance. As an example, connecting from Bangkok to Copenhagen increases TTFB by a whopping 110% when requesting a small text file over a completely new TCP connection.
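Most of that penalty comes from extra round trips before the first byte can flow. The back-of-the-envelope model below counts them: one for the TCP handshake and, assuming the connection uses TLS, one or two more for the TLS handshake depending on the version. The 200 ms RTT and 5 ms server time are hypothetical, and real results also depend on factors such as TCP slow start and session resumption, so this model is not meant to reproduce the 110% figure.

```python
def estimated_ttfb_ms(
    rtt_ms: float,
    server_ms: float,
    new_connection: bool,
    tls_handshake_rtts: int = 1,
) -> float:
    """Rough TTFB estimate: network round trips times RTT, plus server time.

    A warm, reused connection needs one round trip for the request itself.
    A cold connection first spends one RTT on the TCP handshake and
    `tls_handshake_rtts` more on TLS (1 for a full TLS 1.3 handshake,
    2 for TLS 1.2, 0 for plain HTTP).
    """
    round_trips = 1
    if new_connection:
        round_trips += 1 + tls_handshake_rtts
    return round_trips * rtt_ms + server_ms


warm = estimated_ttfb_ms(rtt_ms=200, server_ms=5, new_connection=False)
cold = estimated_ttfb_ms(rtt_ms=200, server_ms=5, new_connection=True)
print(warm, cold)  # 205 605
```

Because the handshake cost scales with RTT, the longer the path, the more a pre-established connection saves in absolute milliseconds.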

Traditionally, pairing storage from one company with a CDN from another can reduce connection reuse unless you're consistently serving large amounts of traffic globally.

By designing a custom connection management system and coupling Bunny CDN with Bunny Storage, we are able to maintain an always-alive connection from each of our CDN nodes to each storage region. This means that regardless of how often your content is accessed, a connection is already open and ready, which significantly improves TTFB.

Our system will not only reuse these connections but also actively maintain them even when not in use.
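A persistent per-region connection pool can be sketched with Python's standard `http.client` module. Everything here is an assumption for illustration: the `StorageConnectionPool` name, the placeholder `.example` hostnames, and the idea of keeping sockets warm with periodic `HEAD` requests; the post does not describe how bunny.net's connection manager works internally.

```python
import http.client
from typing import Dict


class StorageConnectionPool:
    """One persistent HTTPS connection per storage region (single-threaded sketch)."""

    def __init__(self, hosts: Dict[str, str], timeout_s: float = 5.0) -> None:
        self.hosts = hosts  # region code -> storage hostname (placeholders)
        self.timeout_s = timeout_s
        self._conns: Dict[str, http.client.HTTPSConnection] = {}

    def get(self, region: str) -> http.client.HTTPSConnection:
        """Return the cached connection, creating it on first use.

        Constructing HTTPSConnection does not open a socket; the TCP and TLS
        handshakes happen on the first request and are then reused.
        """
        conn = self._conns.get(region)
        if conn is None:
            conn = http.client.HTTPSConnection(self.hosts[region], timeout=self.timeout_s)
            self._conns[region] = conn
        return conn

    def reset(self, region: str) -> None:
        """Drop a broken connection; the next get() builds a fresh one."""
        conn = self._conns.pop(region, None)
        if conn is not None:
            conn.close()

    def keepalive_once(self, path: str = "/") -> None:
        """Send a cheap HEAD request on every connection so idle sockets stay open."""
        for region in self.hosts:
            conn = self.get(region)
            try:
                conn.request("HEAD", path)
                conn.getresponse().read()  # drain the response so the socket can be reused
            except (OSError, http.client.HTTPException):
                self.reset(region)  # reconnect lazily on the next get()


pool = StorageConnectionPool({"DE": "de.storage.example", "SG": "sg.storage.example"})
assert pool.get("DE") is pool.get("DE")  # the same connection object is reused
```

In practice `keepalive_once` would run on a timer shorter than the storage servers' idle timeout, and a multi-threaded edge server would need per-connection locking, since `http.client` connections are not designed to be shared across threads.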

It's an ongoing process!

We're excited about the performance leaps we've achieved with Bunny Storage, which have completely transformed how cold data storage is viewed. Today, Bunny Storage powers some of the fastest static websites on the planet, and we can't wait to make it even faster.

While an average latency of 17ms is impressive, we still see some outliers in locations farther away from our 15 storage regions.

Over the next few years, we will continue expanding both the Standard and Edge SSD storage tiers, with the goal of reaching sub-30ms latency for 90+% of the world.

We are on a mission to make the internet hop faster, and we're excited to keep raising the bar.

Help us hop even faster!

If you enjoy our mission of making the internet hop faster and would like to help power the next generation of internet applications, make sure to check out our careers page. We are looking for people truly excited by innovation and pushing the boundaries of performance to join our fluffle.